How convenient would it be to have an on-call radiologist in the palm of your hand? Researchers hope to achieve that goal with a chatbot app that answers questions from healthcare providers. They unveiled the app at this week's Society of Interventional Radiology (SIR) 2017 annual meeting.

The chatbot uses artificial intelligence to create a virtual interventional radiology consultant that provides evidence-based answers to frequently asked questions. The interaction is done in a conversational style, beginning with a text message similar to how people communicate with friends and colleagues.

Dr. Edward Lee, PhD, from UCLA.

"We believe this brings a benefit to both radiologists and especially general clinicians who can get the information they need in a super-quick way," said app co-developer Dr. Edward Lee, PhD, from the David Geffen School of Medicine at the University of California, Los Angeles (UCLA). "Radiologists can also benefit because they [will] spend less time answering routine questions and more time focusing on taking care of their patients."

Watson's contribution

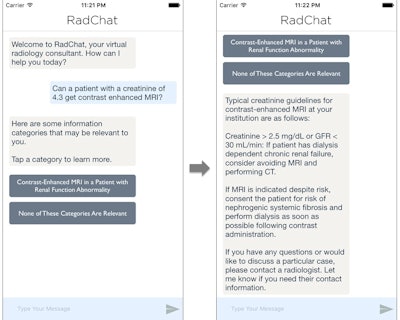

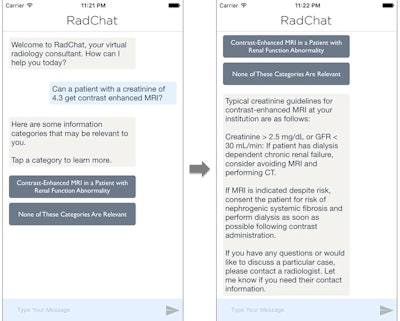

The chatbot app, dubbed RadChat, answers questions such as whether a patient could tolerate a contrast-enhanced scan or which modality is best to address a particular medical problem.

"There are all kinds of routine questions that we deal with day to day, all of which can be automated within our application," Lee said.

To build the chatbot, the researchers used natural language processing (NLP) technology powered by IBM's Watson. The deep-learning technology is designed to enable RadChat to understand a wide range of questions and respond intelligently.

"In deep learning, the artificial neurons in a neural network process large datasets to discover patterns, learn from these patterns, and respond without direct human intervention," he explained.

Lee and colleagues gathered more than 3,000 sample data points that simulate common queries received by interventional radiologists during consultations, along with the corresponding correct answers. As a result, when a clinician asks a question, the app can understand the query and almost instantly provide an intelligent response.

The Q&A between a clinician and RadChat is similar to texting a colleague. Image courtesy of Dr. Edward Lee, PhD.

For example, say a clinician wants to know whether a patient with a creatinine level of 4.3 can undergo a contrast-enhanced MRI scan. RadChat would respond with the creatinine thresholds that determine whether such an exam should be allowed or avoided.

"This kind of information is super valuable because it is all carefully created from the radiology literature by a team of academic radiologists and is evidence-based," Lee said. "The requesting clinicians not only get an answer rapidly, but they get an answer that is supported by the most cutting-edge medical data."

RadChat also could help determine which imaging studies are appropriate for a given clinical scenario or whether an implant is MRI-compatible.

Complex cases

Of course, there will be questions that require more than a simple "yes" or "no" or a numbers-driven reply on how to handle a medical situation. When a question is more complex and additional information is required, RadChat is directed to contact a human radiologist for his or her interpretation as quickly as possible to facilitate patient care.

Dr. Kevin Seals from UCLA.

"If something comes up that is not perfectly understood or has more ambiguity, that would be a case where a user would be referred to a human interventional radiologist," said co-developer Dr. Kevin Seals, a radiology resident at UCLA. "In cases where we [already] have excellent data and the answer to a particular clinical issue is very clear, that is when automation is appropriate and we want the application to take care of it."
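To make the "answer when confident, otherwise refer" behavior concrete, here is a minimal Python sketch. It is not RadChat's implementation, which relies on IBM Watson's natural language processing; it swaps in a plain bag-of-words cosine similarity over a few curated question-answer pairs. The sample questions, the placeholder answers, the `answer` function, and the 0.5 confidence threshold are all hypothetical, chosen only for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical curated Q&A pairs. RadChat's real data set (3,000+ examples
# prepared by academic radiologists) is not public, so these are placeholders.
CURATED_QA = [
    ("can a patient with high creatinine get contrast mri",
     "<curated guidance on contrast MRI and renal function>"),
    ("is this implant mri compatible",
     "<curated guidance on implant MRI safety>"),
    ("which imaging study is best for suspected pulmonary embolism",
     "<curated imaging appropriateness guidance>"),
]

# Hypothetical fallback message used when no curated answer matches well.
REFERRAL = "Referring you to an on-call interventional radiologist."


def tokens(text: str) -> Counter:
    """Lowercase bag-of-words representation of a question."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def answer(query: str, threshold: float = 0.5) -> str:
    """Return the closest curated answer, or refer to a human if the match is weak."""
    q = tokens(query)
    best_score, best_answer = max(
        (cosine(q, tokens(question)), ans) for question, ans in CURATED_QA
    )
    return best_answer if best_score >= threshold else REFERRAL
```

A clearly matching question such as `answer("Is this implant MRI compatible?")` returns the curated implant guidance, while an open-ended question about a complicated case scores below the threshold and is routed to a human, mirroring the split Seals describes between automatable and ambiguous questions.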

There is a fail-safe feature as well. If a clinician receives an answer from RadChat that is questionable or irrelevant, the clinician can specify that the chatbot has failed. This feedback is used to help the app learn from its mistakes and continually improve the program.

"We can analyze the information from when the program failed and use it to make the application better," Lee said.

All queries are stored in RadChat's database and fed into the app's neural network for additional training to make the chatbot more intelligent. The stored data could also help provide ideas for new functions within the application.
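The article does not describe how RadChat stores queries or failure flags, so the following is only a minimal Python sketch of the feedback loop under assumed names: the `Interaction` and `QueryLog` classes and their methods are hypothetical. It logs every exchange, lets a clinician flag a reply as a failure, and exposes the flagged pairs for review and the full query history as candidate training input. The actual correction and neural network retraining steps are not shown.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Interaction:
    """One clinician query and the chatbot's reply (hypothetical schema)."""
    query: str
    reply: str
    flagged_as_failure: bool = False


@dataclass
class QueryLog:
    """In-memory stand-in for a query database (assumption, not RadChat's design)."""
    interactions: List[Interaction] = field(default_factory=list)

    def record(self, query: str, reply: str) -> int:
        """Store an interaction and return its index so it can be flagged later."""
        self.interactions.append(Interaction(query, reply))
        return len(self.interactions) - 1

    def flag_failure(self, index: int) -> None:
        """Mark a reply the clinician found questionable or irrelevant."""
        self.interactions[index].flagged_as_failure = True

    def failures_for_review(self) -> List[Tuple[str, str]]:
        """Flagged (query, reply) pairs for the team to analyze and correct."""
        return [(i.query, i.reply) for i in self.interactions if i.flagged_as_failure]

    def all_queries(self) -> List[str]:
        """Every stored query, e.g. as extra training input or ideas for new features."""
        return [i.query for i in self.interactions]
```

Keeping every query, not just the flagged ones, matches the article's point that the stored data serves two purposes: further training and suggestions for new functions.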

Clinical use

Currently, a small team of hospitalists, interventional radiologists, and radiation oncologists at UCLA are using RadChat. So far, they've found the app to be helpful and compare it to chatting with a friend on a cellphone.

"Right now, we are in the final aspects of the development phase and have a number of test users at the hospital who are using it across a number of specialties," Seals said. "They are working with us to develop it and give us feedback on what they love and what we need to add."

If and when RadChat is ready for prime time, the developers believe the technology will jumpstart additional chatbots suitable for other clinical applications.

"It would be quite easy to translate this framework into a cardiology chatbot, a neurosurgery chatbot, or anything else we could potentially create," Lee said.

Because the chatbot is still in its infancy, the RadChat developers have yet to sign with a vendor to market the technology.

"Once we get this [app] into multiple institutions and a trial phase, then we will potentially approach vendors or investors or potentially our own company to maybe market this more," Lee said. "We have some interest from international vendors as well."