Artificial intelligence (AI) software can accurately identify tuberculosis (TB) on chest radiographs, offering the potential to serve as an inexpensive or even free method to screen for the often deadly disease in underserved countries, according to a study published online April 25 in Radiology.

A research team from Thomas Jefferson University Hospital in Philadelphia found that a combination of two deep convolutional neural networks, a type of artificial intelligence algorithm, could detect tuberculosis with 96% accuracy. What's more, the group determined that performance could be improved even further if a cardiothoracic radiologist read the few cases on which the two AI models disagreed.

"AI technologies based in deep learning have great potential to classify certain diseases on medical imaging with high accuracy," lead author Dr. Paras Lakhani told AuntMinnie.com.

A treatable disease

Tuberculosis is a treatable disease, yet it is the leading cause of death from infectious disease worldwide. Unfortunately, some regions of the world face both a relative shortage of radiologists who can interpret chest x-rays and high demand for those services -- especially in areas with a high prevalence of TB, according to Lakhani. Cost can also be a barrier.

"In poverty-stricken regions, it may be even too expensive to pay a few dollars for an interpretation, and an automated solution based on AI could be provided for free or practically no cost," Lakhani said.

To help meet this demand, the Thomas Jefferson team turned to deep convolutional neural networks -- a form of deep learning in which a network is trained on image data to perform image classification tasks. Other studies have found that these algorithms show promise in several radiology image analysis applications and could improve on the performance of traditional computer-aided detection software, according to the study authors (Radiology, April 25, 2017).
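To make the idea of a convolutional layer more concrete, here is a minimal Python sketch (not the study's code) that slides a small filter over a toy image and applies a ReLU nonlinearity. The filter weights are hand-set for illustration; in networks such as AlexNet and GoogLeNet, many such filters are stacked and their weights are learned from labeled images.

```python
# Minimal sketch of one convolutional-layer step on a single-channel image
# stored as a list of lists. Illustrative only; not the study's implementation.

def conv2d(image, kernel):
    """Valid (no-padding) 2-D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


def relu(feature_map):
    """Zero out negative responses so only 'activated' positions remain."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4x4 image with a vertical edge, and a hand-set edge-detecting filter.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
activation = relu(conv2d(image, edge_kernel))
print(activation)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The filter responds only in the column where the intensity jumps, which is the same kind of localized activation visualized in the heat map later in this article.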

In their project, the researchers trained and tested deep convolutional neural network models based on two different architectures: AlexNet (designed by Alex Krizhevsky in 2012) and GoogLeNet (developed by Google).

The group used models of AlexNet and GoogLeNet that had received no training before this project, as well as models that had been pretrained on the ImageNet database of 1.2 million photographs. The researchers then evaluated the performance of these models, both individually and combined in an ensemble.
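The article does not say exactly how the two networks' outputs were combined. One common approach is to average each model's probability that an image shows TB and then apply a cutoff. The Python sketch below assumes that approach; the equal default weight, the 0.5 threshold, and the function names are hypothetical choices for illustration, not the study's reported settings.

```python
# Hypothetical sketch: combine two classifiers' TB probabilities by weighted
# averaging. The 0.5 weight and 0.5 threshold are assumptions.

def ensemble_probability(p_alexnet, p_googlenet, w_alexnet=0.5):
    """Weighted average of two models' probabilities that an image shows TB."""
    if not 0.0 <= w_alexnet <= 1.0:
        raise ValueError("w_alexnet must be between 0 and 1")
    return w_alexnet * p_alexnet + (1.0 - w_alexnet) * p_googlenet


def classify(prob, threshold=0.5):
    """Call an image TB-positive when the combined probability reaches the threshold."""
    return prob >= threshold


# Illustrative (made-up) model outputs for a single radiograph.
p = ensemble_probability(0.9, 0.3)
print(p, classify(p))
```

Because the average of two probabilities always lies between them, the ensemble can only change the outcome when the two models fall on opposite sides of the cutoff.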

To train the neural networks to detect TB, the researchers used 685 posteroanterior chest radiographs assembled from four sources: two publicly available datasets from the U.S. National Institutes of Health (NIH), a dataset from Thomas Jefferson University, and a dataset from the Belarus Tuberculosis Portal.

All images were augmented with random cropping, mean subtraction, and mirroring, and some of the neural network models also received training images with additional transformations intended to improve accuracy. Performed using version 1.5i of the NIH's ImageJ software, this additional augmentation included rotations of 90°, 180°, and 270°, as well as contrast enhancement.
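The augmentations described above are simple array operations. The following Python sketch shows what mirroring, 90° rotation (applied repeatedly for 180° and 270°), random cropping, and mean subtraction do to a small grayscale image stored as a list of lists. It is an illustration, not the study's ImageJ pipeline; it subtracts a per-image mean for simplicity, whereas training pipelines often subtract a dataset-wide mean, and contrast enhancement is not modeled.

```python
import random

# Illustrative augmentation helpers for a grayscale image stored as a list of lists.
# Not the study's ImageJ workflow; per-image mean subtraction is a simplification.

def mirror(img):
    """Flip the image left to right (mirror image)."""
    return [row[::-1] for row in img]


def rotate90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def random_crop(img, size, rng):
    """Return a randomly positioned size x size patch."""
    top = rng.randint(0, len(img) - size)
    left = rng.randint(0, len(img[0]) - size)
    return [row[left:left + size] for row in img[top:top + size]]


def subtract_mean(img):
    """Center pixel values on zero by subtracting the image mean."""
    n = sum(len(r) for r in img)
    mean = sum(sum(r) for r in img) / n
    return [[v - mean for v in r] for r in img]


img = [[1, 2], [3, 4]]
rotations = [img]
for _ in range(3):
    rotations.append(rotate90(rotations[-1]))  # 90, 180 and 270 degree versions
print(rotations[1:])  # [[[3, 1], [4, 2]], [[4, 3], [2, 1]], [[2, 4], [1, 3]]]
print(mirror(img))    # [[2, 1], [4, 3]]
```

Each transformed copy counts as an extra training example, which is why augmentation can help when only a few hundred labeled radiographs are available.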

The neural network models were then validated on an additional 172 cases, and the remaining 150 cases were reserved for testing; 75 of the test cases were normal and 75 were positive for TB. After all of the neural networks had been trained, the test cases were used to assess how accurately each model identified TB.
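Taken together, the reported counts imply 685 + 172 + 150 = 1,007 radiographs. The article does not describe how cases were assigned to each set, so the Python sketch below simply shows a reproducible shuffled split with those sizes. The random assignment and the `split_indices` helper are assumptions; note that a purely random split would not by itself guarantee the balanced 75/75 test set the study used.

```python
import random

# Hypothetical sketch of a fixed-size train/validation/test split.
# How the study actually assigned cases is not stated in the article.

def split_indices(n_total, n_train, n_val, seed=0):
    """Shuffle case indices reproducibly and cut them into three disjoint lists."""
    if n_train + n_val > n_total:
        raise ValueError("train + validation exceeds total")
    idx = list(range(n_total))
    random.Random(seed).shuffle(idx)
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]
    return train, val, test


train, val, test = split_indices(685 + 172 + 150, 685, 172)
print(len(train), len(val), len(test))  # 685 172 150
```

Keeping the test set untouched until all training and validation is finished is what makes the test-set sensitivity and specificity an honest estimate.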

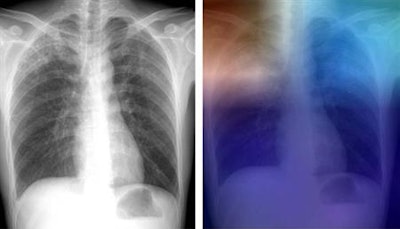

Left: Posteroanterior chest radiograph shows upper lobe opacities with pathologic analysis-proven active TB. Right: Same chest radiograph, with a heat map overlay of one of the strongest activations obtained from the fifth convolutional layer after it was passed through the GoogLeNet-TA classifier. The red and light blue regions in the upper lobes represent areas activated by the deep neural network. The dark purple background represents areas that are not activated. This shows that the network is focusing on parts of the image where the disease is present (both upper lobes). Image courtesy of RSNA.

The researchers performed receiver operating characteristic (ROC) analysis to compare performance and determine optimal sensitivity and specificity values for combining the two types of neural networks into an ensemble to achieve higher accuracy.
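ROC analysis sweeps a decision threshold across a model's output scores and records the sensitivity and specificity at each cutoff. The article does not say which criterion defined the "optimal" values; the Python sketch below assumes Youden's J statistic (sensitivity + specificity − 1), a common choice. The scores and labels are made-up toy values, not study data.

```python
# Sketch of ROC threshold sweeping and operating-point selection.
# Youden's J is an assumed criterion; the study's exact method is not stated here.

def roc_points(scores, labels):
    """(threshold, sensitivity, specificity) for each distinct score used as a cutoff."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = []
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        tn = sum(1 for s, y in zip(scores, labels) if s < t and y == 0)
        points.append((t, tp / pos, tn / neg))
    return points


def youden_threshold(scores, labels):
    """Cutoff that maximizes Youden's J = sensitivity + specificity - 1."""
    return max(roc_points(scores, labels), key=lambda p: p[1] + p[2] - 1)


# Toy example: 1 = TB-positive, 0 = normal.
scores = [0.9, 0.8, 0.75, 0.7, 0.3, 0.2]
labels = [1, 0, 1, 1, 0, 0]
print(youden_threshold(scores, labels))  # (0.7, 1.0, 0.6666666666666666)
```

Lowering the threshold trades specificity for sensitivity; the chosen point is where that trade-off yields the largest combined gain.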

High sensitivity and specificity

Among the neural networks evaluated in the study, an ensemble of pretrained AlexNet and GoogLeNet models that had also received the additional image augmentation performed best, with a sensitivity of 97.3% and a specificity of 94.7%. ROC analysis showed an area under the curve (AUC) of 0.99.
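The AUC has a useful interpretation: it is the probability that a randomly chosen TB-positive case receives a higher score than a randomly chosen normal case, with ties counted as half. An AUC of 0.99 therefore means the model ranked TB cases above normal cases in about 99% of such pairs. The Python sketch below computes AUC directly from that definition on toy, made-up scores; the study's own statistical software is not specified in the article.

```python
# Sketch: AUC as a pairwise ranking probability (equivalent to the
# Mann-Whitney U statistic divided by the number of positive/negative pairs).

def auc(scores, labels):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example: 1 = TB-positive, 0 = normal.
scores = [0.9, 0.8, 0.75, 0.7, 0.3, 0.2]
labels = [1, 0, 1, 1, 0, 0]
print(auc(scores, labels))  # 7 of 9 pairs ranked correctly, about 0.778
```

This pairwise loop is quadratic in the number of cases, which is fine for a 150-case test set; rank-based formulas give the same value faster on larger data.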

In the 13 test cases in which the AlexNet and GoogLeNet models disagreed, an independent board-certified cardiothoracic radiologist blindly reviewed the studies and provided a final interpretation. The radiologist correctly interpreted all 13 cases, raising specificity to 100% and accuracy to 98.7%.
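The radiologist-augmented workflow described above can be expressed as a simple routing rule: when both models give the same call, that call stands; when they disagree, the case is referred for a human read. The Python sketch below is a hypothetical illustration of that rule; `radiologist_read` is a placeholder callback standing in for the human interpretation, and the example calls are made up.

```python
# Hypothetical sketch of the discordant-case workflow: concordant AI calls stand,
# discordant cases are referred to a radiologist (modeled here as a callback).

def combined_read(alexnet_calls, googlenet_calls, radiologist_read):
    """Return final TB calls and the indices of cases referred to the radiologist."""
    final = []
    referred = []
    for i, (a, g) in enumerate(zip(alexnet_calls, googlenet_calls)):
        if a == g:
            final.append(a)  # both models agree, so keep the shared call
        else:
            referred.append(i)
            final.append(radiologist_read(i))  # disagreement: defer to the human reader
    return final, referred


# Made-up calls for four cases (True = TB-positive).
alexnet = [True, True, False, False]
googlenet = [True, False, True, False]
truth = [True, False, True, False]
final, referred = combined_read(alexnet, googlenet, lambda i: truth[i])
print(final, referred)  # [True, False, True, False] [1, 2]
```

A limitation of this design is visible in the code: if both models make the same mistake, the case is never referred, so the radiologist cannot correct it.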

Performance in identifying TB cases

| Metric | Ensemble of GoogLeNet and AlexNet deep-learning algorithms | Ensemble of algorithms with cardiothoracic radiologist interpretation of discordant results |
|---|---|---|
| Sensitivity | 97.3% | 97.3% |
| Specificity | 94.7% | 100% |
| Accuracy | 96.0% | 98.7% |
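Because the test set contained 75 TB-positive and 75 normal cases, the reported percentages can be converted back into case counts: 97.3% sensitivity corresponds to 73 of 75 positives detected, 94.7% specificity to 71 of 75 normals correctly cleared, and 100% specificity to all 75. The Python sketch below recomputes the metrics from those inferred counts; the counts are derived from the percentages under the 75/75 assumption, not taken from the paper's tables.

```python
# Recompute sensitivity, specificity and accuracy from confusion-matrix counts
# inferred from the reported percentages (assuming 75 positive, 75 normal cases).

def metrics(tp, fn, tn, fp):
    """Return (sensitivity, specificity, accuracy) as fractions."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


ensemble = metrics(tp=73, fn=2, tn=71, fp=4)
with_radiologist = metrics(tp=73, fn=2, tn=75, fp=0)
for name, (sens, spec, acc) in [("ensemble", ensemble), ("with radiologist", with_radiologist)]:
    print(f"{name}: sensitivity {sens:.1%}, specificity {spec:.1%}, accuracy {acc:.1%}")
# ensemble: sensitivity 97.3%, specificity 94.7%, accuracy 96.0%
# with radiologist: sensitivity 97.3%, specificity 100.0%, accuracy 98.7%
```

The ensemble's accuracy works out to 144 of 150 cases, or 96.0%, matching the 96% figure reported for the AI alone; the radiologist's reads of the discordant cases remove the four false positives, giving 148 of 150, or 98.7%.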

The deep-learning algorithms both had three false-negative findings, which, under the study protocol, were not sent to the radiologist for review.

Current status

The AI software is currently available for use, Lakhani said. The next step is to deploy it in a TB-prevalent region and assess workflow strategies for how it could be used.

"I am particularly interested in workflows where AI is combined with radiology-expert opinion, which may have potential for greater accuracy," Lakhani said. "Another potential workflow idea is that AI handles the 'easy' cases, and the 'tough' ones are sent to a radiologist for a combined AI/radiology read. In the future, I would love to further work on this."
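One way to picture the "easy versus tough" workflow Lakhani describes is a confidence band: the AI reports cases whose TB probability is very low or very high, and anything in between goes to a radiologist. The Python sketch below is hypothetical; the 0.1 and 0.9 cutoffs and the `triage` function are illustrative assumptions, not validated values or part of the study.

```python
# Hypothetical triage rule for an "AI handles easy cases" workflow.
# The 0.1 / 0.9 cutoffs are illustrative assumptions, not validated values.

def triage(prob, low=0.1, high=0.9):
    """Route a case by the AI's TB probability."""
    if not 0.0 <= low < high <= 1.0:
        raise ValueError("require 0 <= low < high <= 1")
    if prob <= low:
        return "AI: normal"
    if prob >= high:
        return "AI: TB suspected"
    return "radiologist review"


for p in (0.05, 0.5, 0.95):
    print(p, triage(p))
```

Widening the band sends more cases to radiologists and lowers the risk of unreviewed AI errors; narrowing it saves reading time but relies more heavily on the model.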

Lakhani added that he is happy to share or collaborate with anyone who would like to make good use of the technology.