A newly tested computer program shows excellent potential for improving the accuracy of breast-disease diagnosis -- without the changes in reading practice that a computer-aided detection (CAD) system requires.

In its first practical tryout, reported in the latest issue of the American Journal of Roentgenology, a program developed at Stanford University in Stanford, CA, automatically measured the concordance of mammographic findings and biopsy results in a series of 92 consecutive patients.

The computer system achieved 100% sensitivity -- outperforming a panel of radiologists from the University of California, San Francisco, who had reviewed the cases earlier -- by identifying the one patient whose discordantly benign pathology results proved incorrect at 11-month follow-up (AJR, February 2004, Vol. 182:2, pp. 481-488).

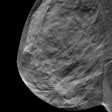

The 49-year-old patient’s mammogram showed clustered, pleomorphic microcalcifications, but her biopsy revealed only fat necrosis. The radiologists who reviewed her case weren’t privy to the computer system’s analysis, which estimated a 70% probability of biopsy error based on the patient’s mammographic findings and history. A subsequent mastectomy found seven foci of ductal carcinoma in situ.

Experts say radiologists should always correlate biopsy results with mammographic findings to ensure that a benign biopsy makes sense and isn’t the result of sampling error, e.g., the biopsy needle having missed the intended area of interest.

While identifying one additional cancer among 92 biopsies may be a modest yield, mammographers would probably welcome a program that supplemented their knowledge and experience by automatically flagging the most likely errors.

"You want to make sure that you take every precaution not to miss a breast cancer or delay a diagnosis," said lead study author Dr. Elizabeth Burnside in an interview with AuntMinnie.com. "Having a computer to see if it agrees with the impression of the human is always nice, because computers have different talents than humans do."

Burnside, who is now chief of breast imaging at the University of Wisconsin Medical School in Madison, developed the automated system while obtaining her master’s degree in medical informatics at Stanford.

The concept behind the system is fairly straightforward. It calculates the probability of cancer in an individual patient based on her history and mammographic findings, using the probabilities reported for those characteristics in the medical literature.
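To illustrate the kind of calculation involved, here is a minimal sketch of Bayesian updating in odds form. It assumes, as a simplification, that each finding contributes independently through a likelihood ratio (a naive-Bayes model); the Stanford system is a full Bayesian network that also models dependencies between findings, so this is not its actual method. The function name `posterior_probability`, the prior, and the likelihood ratios are all hypothetical and are not taken from the study or the literature.

```python
"""Sketch of Bayesian updating of a cancer probability.

Assumption: findings are treated as conditionally independent (naive Bayes).
All numbers below are hypothetical, for illustration only.
"""


def posterior_probability(prior: float, likelihood_ratios: list[float]) -> float:
    """Update a prior probability with one likelihood ratio per finding."""
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    odds = prior / (1.0 - prior)   # convert probability to odds
    for lr in likelihood_ratios:
        odds *= lr                 # Bayes' rule in odds form: posterior odds = prior odds x LR
    return odds / (1.0 + odds)     # convert odds back to probability


# Hypothetical inputs, NOT values from the study:
prior = 0.02  # hypothetical baseline probability of cancer
findings = {
    "pleomorphic calcifications": 8.0,  # hypothetical likelihood ratio
    "clustered distribution": 2.0,      # hypothetical likelihood ratio
}
p = posterior_probability(prior, list(findings.values()))
print(f"posterior probability of cancer: {p:.3f}")  # prints "posterior probability of cancer: 0.246"
```

With these made-up inputs the two suspicious findings raise a 2% prior to roughly 25%. A Bayesian network generalizes this by letting findings influence one another rather than multiplying independent ratios.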

That said, developing the Bayesian network to make such calculations required two years of effort by Burnside and her Stanford colleagues, which probably explains why no such system had been developed before.

In the study at UCSF, Burnside tested the expert system on a common conundrum in breast imaging: up to 6.2% of biopsies yield discordant results, but careful correlation can catch 70% of the cancers that would otherwise be missed.

Based on patient characteristics, the computer flagged nine of the 63 benign biopsy results in the series as having at least a 40% probability of sampling error. While only one of the nine proved to be a sampling error at follow-up, the system still maintained a specificity of 91%.
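The reported figures can be checked with a short calculation. The article does not state the specificity denominator; this sketch assumes it covers all 92 biopsies minus the single true sampling error (91 cases), and that no case other than the nine flagged benign results was flagged. Under that assumption the arithmetic reproduces both the 100% sensitivity and the 91% specificity.

```python
# Worked check of the reported sensitivity and specificity.
# Assumption: specificity is computed over all 92 biopsies except the one
# true sampling error, and only the nine benign results were flagged.

total_biopsies = 92
true_sampling_errors = 1  # the one discordant case confirmed at follow-up
flagged = 9               # benign results flagged at >= 40% probability
flagged_true = 1          # flagged cases that proved to be sampling error

true_positives = flagged_true
false_negatives = true_sampling_errors - flagged_true
false_positives = flagged - flagged_true
true_negatives = (total_biopsies - true_sampling_errors) - false_positives

sensitivity = true_positives / (true_positives + false_negatives)  # 1 / 1
specificity = true_negatives / (true_negatives + false_positives)  # 83 / 91

print(f"sensitivity = {sensitivity:.0%}")  # prints "sensitivity = 100%"
print(f"specificity = {specificity:.0%}")  # prints "specificity = 91%"
```

If the denominator were instead only the 62 truly benign results, specificity would be 54/62, or about 87%, so the published 91% appears to count every biopsy in the series.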

"More than half of the patients in our study had a probability of sampling error of less than 1%," the authors wrote. "On the other hand, our system also reliably predicted which results were likely to be discordant and therefore likely to require careful review by the radiologists."

While the UCSF radiologists didn’t detect the discordance in the one case that turned out to be an erroneous biopsy, they were able to correctly dismiss other cases that the computer had found suspicious.

"Radiologists have the ability to take into account factors that are not modeled by our Bayesian network," the authors noted. "For example, an abnormality found in the axillary tail of a 48-year-old woman may have been seen in retrospect to have a small fatty notch, a characteristic typical of an intramammary lymph node."

"I think when we test this on a larger number of patients, we’ll find that both the humans and the expert system perform well," said Burnside, who is seeking large datasets of structured reports for testing.

"It’s just a matter of performing well on different cases," Burnside said of radiologists versus computers. "They’ll perform even better in combination."

By Tracie L. Thompson
AuntMinnie.com staff writer

January 29, 2004


Copyright © 2004 AuntMinnie.com