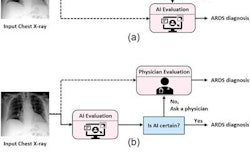

An artificial intelligence (AI) algorithm has been trained to achieve expert physician-level performance when diagnosing acute respiratory distress syndrome (ARDS) on chest x-rays. Results of the study were published online April 20 in Lancet Digital Health.

The research represents a potential new approach to supporting patients with ARDS, who often go underdiagnosed because of variability in how chest x-rays are interpreted.

"Despite research investment, current treatment for ARDS remains largely supportive and mortality remains at 35%. Patients who develop ARDS often go unrecognized and do not receive evidence-based care," wrote lead author Dr. Michael Sjoding from University of Michigan Medical School and colleagues.

Acute respiratory distress syndrome is characterized by the acute onset of severe hypoxemia and noncardiogenic lung edema in patients with conditions such as sepsis, pneumonia, or trauma. Intensivists in clinical practice agree poorly when identifying ARDS findings on chest radiographs, and ARDS clinical research study coordinators do little better, the authors stated.

In this study, the researchers sought to train a deep convolutional neural network (CNN) to detect ARDS findings on chest radiographs. The training dataset included all consecutive patients admitted to the University of Michigan between January 1, 2016, and June 30, 2017, who developed acute hypoxemic respiratory failure, defined as a PaO2/FiO2 ratio of less than 300 while on one of the following respiratory support modalities: invasive mechanical ventilation, noninvasive ventilation, or heated high-flow nasal cannula. The PaO2/FiO2 ratio compares the partial pressure of oxygen in arterial blood (PaO2) with the fraction of oxygen in the inspired air (FiO2); lower values indicate more severe hypoxemia. Patients with ARDS have a ratio of 300 or less, but a low ratio alone does not establish the diagnosis, which also requires bilateral opacities on chest imaging. That imaging requirement is why inconsistent radiograph interpretation matters.
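The arithmetic behind the study's inclusion criterion is simple: PaO2 in mm Hg divided by FiO2 expressed as a fraction. The Python sketch below illustrates it. It is not the authors' code; the function names and the support-modality labels are hypothetical, and it assumes FiO2 is recorded as a fraction between 0.21 and 1.0 rather than as a percentage.

```python
# Minimal sketch (not the study's code) of the PaO2/FiO2 screening criterion.
# The modality strings below are hypothetical labels chosen for illustration.
QUALIFYING_SUPPORT = {
    "invasive_mechanical_ventilation",
    "noninvasive_ventilation",
    "heated_high_flow_nasal_cannula",
}


def pf_ratio(pao2_mmhg: float, fio2_fraction: float) -> float:
    """Return PaO2 (mm Hg) divided by FiO2 given as a fraction (0.21 to 1.0)."""
    if not 0.21 <= fio2_fraction <= 1.0:
        # Assumption: FiO2 charted as a percentage must be divided by 100 first.
        raise ValueError("FiO2 must be a fraction between 0.21 and 1.0")
    return pao2_mmhg / fio2_fraction


def meets_hypoxemic_failure_criterion(
    pao2_mmhg: float, fio2_fraction: float, support: str
) -> bool:
    """True if the P/F ratio is below 300 on a qualifying support modality."""
    return support in QUALIFYING_SUPPORT and pf_ratio(pao2_mmhg, fio2_fraction) < 300


if __name__ == "__main__":
    # PaO2 of 80 mm Hg on 50% oxygen gives a ratio of 160.
    print(pf_ratio(80, 0.5))
    print(meets_hypoxemic_failure_criterion(80, 0.5, "noninvasive_ventilation"))
```

For example, a patient with a PaO2 of 80 mm Hg on 50% oxygen has a ratio of 160 and would meet the criterion while on noninvasive ventilation, but not while on a modality outside the three listed. As the text notes, meeting this threshold identifies hypoxemic respiratory failure; it does not by itself identify ARDS.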

Each chest radiograph was independently reviewed for the presence of ARDS by at least two physicians trained in critical care medicine with an interest in ARDS research.

The CNN algorithm detected ARDS with an area under the receiver operating characteristic curve (AUROC) of 0.92. In a subgroup of 413 images reviewed by at least six physicians, the CNN's AUROC was 0.93, with a sensitivity of 83% and a specificity of 88.3%.
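The AUROC is the probability that a model ranks a randomly chosen ARDS radiograph above a randomly chosen non-ARDS radiograph, whereas sensitivity and specificity depend on a probability cutoff. The Python sketch below computes all three from binary labels and model probabilities. It assumes a single consensus label per image and a cutoff of 0.5; the article does not state which threshold or labeling rule the authors used for the reported sensitivity and specificity, and the toy data in the usage example are made up.

```python
# Minimal sketch of the evaluation metrics, assuming one binary label per image
# (1 = ARDS, 0 = not ARDS) and a model probability per image.


def auroc(labels, scores):
    """Rank-based AUROC: fraction of (positive, negative) pairs in which the
    positive image gets the higher score; ties count as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(labels, scores, threshold=0.5):
    """Sensitivity and specificity when scores >= threshold are called ARDS.
    The 0.5 default is an assumption, not the study's stated cutoff."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


if __name__ == "__main__":
    # Toy example: three ARDS images and three non-ARDS images.
    labels = [1, 1, 1, 0, 0, 0]
    scores = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]
    print(auroc(labels, scores))  # 8 of 9 pairs ranked correctly
    print(sensitivity_specificity(labels, scores))
```

In the toy example, eight of the nine positive/negative pairs are ranked correctly (AUROC of about 0.89), and at a 0.5 cutoff both sensitivity and specificity are two-thirds. The pairwise loop is quadratic in the number of images, which is fine for illustration; production code would usually sort the scores or use an established library.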

Among images with zero of six ARDS annotations (n = 155), the median CNN probability was 11%, with six (4%) assigned a probability above 50%. Among images with six of six ARDS annotations (n = 27), the median CNN probability was 91%, with two (7%) assigned a probability below 50%. In an external cohort of 958 chest radiographs from 431 patients with sepsis, the AUROC was 0.88. When radiographs annotated as equivocal were excluded, the AUROC was 0.93.

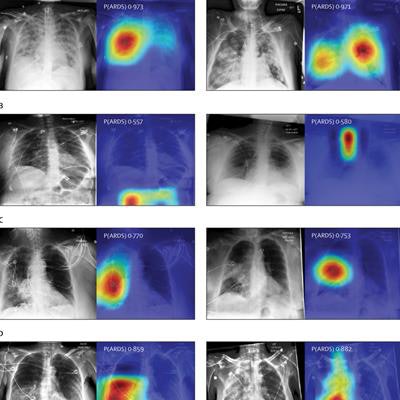

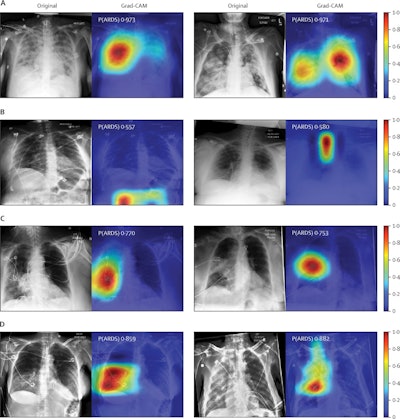

Chest radiographs were grouped based on CNN probabilities of ARDS and physician ARDS annotations; gradient-weighted class activation mapping (Grad-CAM) was then used to localize areas used by the CNN to identify ARDS within the radiographs. The heat map illustrates the importance of local areas within the image for classification. The importance value is scaled between 0 and 1, where a higher number indicates that the area is of higher importance for classifying the image as consistent with ARDS. (A) Chest radiographs annotated as ARDS by six of six physicians and assigned a high CNN probability. (B) Chest radiographs annotated as ARDS by six of six physicians but assigned a lower probability by the CNN. (C) Chest radiographs annotated as ARDS by zero of six physicians but assigned a high probability by the CNN. (D) Chest radiographs with disagreement among physicians (three of six physicians annotating ARDS) and assigned a high probability by the CNN. P(ARDS): probability that the chest radiograph is consistent with ARDS. Image courtesy of Lancet Digital Health.

Visual evaluation of the algorithm outputs confirmed that it learned to focus on regions of the lung that exhibited opacities when classifying images as consistent with ARDS. The algorithm's performance was comparable to or better than that of individual physicians, the researchers wrote.
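Grad-CAM, the technique behind the heat maps in the figure, weights each feature map of a network's last convolutional layer by the average gradient of the target output (here, the ARDS probability) with respect to that map, sums the weighted maps, keeps only positive contributions, and rescales the result to the 0-to-1 range shown in the figure. The pure-Python sketch below shows only that combination step. It assumes the feature maps and gradients have already been extracted, which in practice is done with a deep learning framework's automatic differentiation, and it uses min-max scaling; the article does not specify the authors' layer choice, upsampling, or normalization.

```python
# Minimal Grad-CAM sketch: combines precomputed feature maps and gradients.
# feature_maps and gradients are lists of K channels, each an H x W list of
# lists from the same convolutional layer (assumed inputs, not the study's).


def grad_cam(feature_maps, gradients):
    """Return an H x W heat map scaled to [0, 1] (min-max scaling assumed)."""
    if not feature_maps or len(feature_maps) != len(gradients):
        raise ValueError("need matching, non-empty feature maps and gradients")
    h, w = len(feature_maps[0]), len(feature_maps[0][0])

    # Step 1: one weight per channel = global average of its gradient map.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]

    # Step 2: weighted sum of the feature maps, then ReLU (drop negatives).
    cam = [
        [
            max(0.0, sum(wk * a[i][j] for wk, a in zip(weights, feature_maps)))
            for j in range(w)
        ]
        for i in range(h)
    ]

    # Step 3: rescale to [0, 1]; a flat map carries no localization signal.
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    if hi == lo:
        return [[0.0] * w for _ in range(h)]
    return [[(v - lo) / (hi - lo) for v in row] for row in cam]


if __name__ == "__main__":
    # Two 2 x 2 channels: channel 1 pushes toward ARDS, channel 2 away from it.
    maps = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
    grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
    print(grad_cam(maps, grads))
```

In the toy input, only the top-left cell receives a positive weighted activation, so it is the sole highlighted region after scaling. In a real model, the low-resolution map would then be upsampled to the radiograph's size and overlaid as a color heat map like those in the figure.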

The authors noted a few study limitations. The external test set was annotated using an alternative method that is useful in ARDS translational research but may be less suitable for algorithm evaluation. The test set also lacked exact time stamps of ARDS onset, which prevented an assessment of possible delays in detection.

Nonetheless, the results illustrate the power of a deep-learning model to accurately identify chest radiographs consistent with ARDS, the researchers wrote.

"Further research is needed to evaluate how the use of these algorithms could support real-time identification of ARDS patients to ensure fidelity with evidence-based care or to support ongoing ARDS research," they concluded.