Nonradiologist physicians participating in an artificial intelligence (AI) reader study achieved higher diagnostic accuracy for reading chest x-rays after receiving explainable AI advice, according to researchers.

A group led by Susanne Gaube, PhD, of Ludwig Maximilian University of Munich, Germany, explored whether AI explanations of findings on patient x-rays affect the diagnostic decisions of physicians with different levels of task expertise. Internal medicine and emergency medicine physicians saw a significant improvement in chest x-ray reading performance after receiving AI annotations. Radiologists also had a slight improvement in performance, but the difference was not statistically significant. Radiologists did, however, report higher diagnostic confidence.

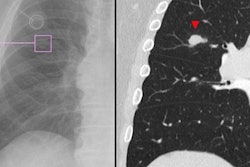

"The results indicate that having an explanation (i.e., annotation on the x-ray indicating the area that determined the prediction) as part of the advice positively affected physicians' diagnostic accuracy and their quality rating," the group wrote. The study was published online January 25 in Scientific Reports

Moreover, both groups ranked advice provided by an AI algorithm higher than that given by a human expert, the authors noted.

AI-generated clinical advice is becoming more prevalent in healthcare, with an array of certified AI-enabled clinical decision-support systems available on the market. Many models developed for radiology tasks have shown excellent performance, equal to or even surpassing that of human experts, but few studies have investigated the actual clinical impact of these products, according to the authors.

Previous research has shown that providing case-by-case AI explanations on x-rays can increase trust in and reliance on AI advice, even when the advice is incorrect. However, it is less well understood how the "explainability" of advice affects users with different levels of task expertise, the group added.

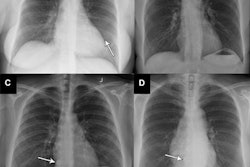

To elucidate this issue, the researchers presented a set of eight chest x-rays via an external DICOM viewer to 223 physicians with different levels of task expertise. Radiologists (n = 106) were considered task experts, and internal medicine and emergency medicine physicians (n = 117) were considered non-task experts.

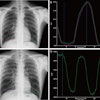

The researchers manipulated whether the x-rays came with or without a visual annotation and whether they were labeled as coming from an AI or a human radiologist. The participating physicians were asked to rate the quality of the presented diagnostic advice, give a final diagnosis, and judge how confident they were with their diagnosis.

Overall, participants showed higher diagnostic accuracy when they received advice with an explanation. In terms of the advice source, nonexperts performed 5.66% better and experts 3.41% better when they received advice labeled as coming from the AI compared with advice labeled as coming from a human expert, according to the analysis. But only the nonexpert improvement reached statistical significance (p = 0.042).

In addition, radiologists reported 0.32 points higher mean confidence (on a 7-point Likert scale) in their final diagnosis when they received advice labeled as coming from the AI rather than from a human, and nonradiologist physicians rated their mean confidence 0.06 points higher.

"Surprisingly, the advice quality rating was only marginally affected by both sources of advice, which means that we neither found convincing evidence for algorithmic appreciation nor for algorithmic aversion," the group wrote.

The study is among the first to explore the question of whether AI explanations affect the diagnostic decisions of physicians with different amounts of task expertise, the group noted.

Ultimately, the findings underline the idea that non-task experts such as internal medicine and emergency medicine physicians may especially benefit from implementing this type of AI software for image-reviewing tasks, they suggested.

"Further research should focus on how explainable AI advice has to be presented to non-task experts to optimize utility while minimizing blind over-reliance in the event that the [AI software] errs," Gaube and colleagues concluded.