Nurses with no training in ultrasound were able to acquire diagnostic-quality echocardiography images thanks to the guidance of an artificial intelligence (AI)-based software application, according to a study published February 18 in JAMA Cardiology.

In the prospective study of 240 patients at two academic medical centers, nurses acquired high-quality images using a commercially available software application (Caption Guidance, Caption Health) installed on a portable ultrasound system. The images were judged to be of diagnostic quality on four primary endpoints: left ventricular size, global left ventricular function, right ventricular size, and presence of nontrivial pericardial effusion.

"The ability to provide echocardiography outside the traditional laboratory setting is largely limited by a lack of trained sonographers and cardiologists to acquire and interpret images," Northwestern University cardiologist Dr. James D. Thomas and colleagues noted in their report about the data. "Using this AI-based technology, individuals with no previous training may be able to obtain diagnostic echocardiographic clips of several key cardiac parameters."

The study was conducted to support the software's regulatory submission to the U.S. Food and Drug Administration (FDA). It exceeded the agency's prespecified performance requirements, and the software was authorized in February 2020 through the FDA's De Novo pathway for novel devices.

Caption Health, formerly called Bay Labs, is positioning the Caption product platform, which includes AI-driven guidance and interpretation software, as a tool for expanding access to ultrasound technology, because it enables healthcare professionals with little ultrasound experience to perform scans.

Echocardiography is a "highly specialized imaging tool," which at the highest level requires nine months of specialized fellowship training, the authors noted. In addition, during the COVID-19 pandemic, the software could allow frontline healthcare workers to acquire images, sparing sonographers from exposure to SARS-CoV-2 infection, according to the company.

The study was conducted at two academic medical centers -- Northwestern Memorial Hospital in Chicago and Minneapolis Heart Institute in Minneapolis -- with eight registered nurses conducting the echocardiography examinations. They had undergone minimal training, consisting of one hour of didactic instruction and nine practice scans on volunteer models.

Each nurse performed 30 scans using the guidance software installed on a portable ultrasound scanner (uSmart 3200t Plus, Terason). The mean acquisition time was 30 minutes. Ten standard transthoracic echocardiography views were obtained for each patient.

The same patients were also scanned by sonographers on the same ultrasound unit, but without the AI guidance software. A panel of five expert echocardiographers then independently evaluated the diagnostic quality of all scans.

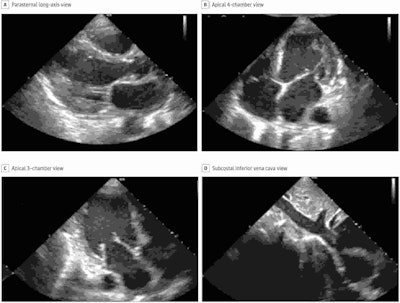

Still images of standard transthoracic echocardiographic views acquired by a nurse using the deep-learning algorithm were judged to be of diagnostic quality. Image courtesy of Caption Health.

The FDA required the software to produce images of sufficient quality for diagnosis in at least 80% of patients on each of the four primary endpoints. The nurses' scans were of diagnostic quality in 92.5% to 98.8% of patients (see table), with no significant difference compared with the sonographers' scans.

"Our study met all FDA-prespecified primary end points, with consistent results across [body mass index] categories and cardiac pathology, including potential distractors, such as pacemakers and prosthetic valves, with little difference between the nurse and sonographer scans," Thomas and colleagues wrote.

AI-guided echocardiography: performance by nurses on four key outcome measures

| Endpoint | Diagnostic quality (% of patients) |
|---|---|
| Left ventricular size | 98.8% |
| Global left ventricular function | 98.8% |
| Right ventricular size | 92.5% |
| Nontrivial pericardial effusion | 98.8% |
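As a rough arithmetic check, the short Python sketch below compares each reported rate in the table with the FDA's 80% threshold and converts the percentages into approximate patient counts. It assumes every endpoint was assessed in all 240 patients; the published per-endpoint denominators may differ slightly, so the counts are illustrative only. The function name `check_endpoints` is hypothetical and not part of any study software.

```python
# Reported diagnostic-quality rates for the four primary endpoints (from the table)
results = {
    "Left ventricular size": 98.8,
    "Global left ventricular function": 98.8,
    "Right ventricular size": 92.5,
    "Nontrivial pericardial effusion": 98.8,
}

FDA_THRESHOLD = 80.0  # minimum % of patients with diagnostic-quality images
N_PATIENTS = 240      # assumption: every endpoint assessed in all 240 patients


def check_endpoints(rates, threshold=FDA_THRESHOLD, n=N_PATIENTS):
    """Hypothetical helper: return (endpoint, approx. patients, margin, passed) tuples."""
    rows = []
    for endpoint, pct in rates.items():
        approx_count = round(pct / 100 * n)   # approximate number of patients
        margin = round(pct - threshold, 1)    # percentage points above the threshold
        rows.append((endpoint, approx_count, margin, pct >= threshold))
    return rows


for endpoint, count, margin, passed in check_endpoints(results):
    print(f"{endpoint}: ~{count}/{N_PATIENTS} patients, "
          f"{margin:+.1f} points vs. threshold, pass={passed}")
```

Even the lowest-scoring endpoint, right ventricular size, clears the threshold by 12.5 percentage points under these assumptions.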

The nurses also performed on par with sonographers on a range of secondary endpoints. However, sonographers outperformed nurses in assessing the size of the inferior vena cava (91.5% vs. 57.4% diagnostic quality), which the authors called a "clear target for further algorithm development."

The authors also acknowledged that limitations of the study included a relatively small number of patients and nurses and the lack of recruitment from intensive care units and emergency departments.

Thomas and colleagues said the results complement prior research, which has largely focused on applying AI to medical images after they are acquired rather than on guiding image acquisition.

"Improvements in ultrasonography and computer hardware have led to the downsizing and cost reduction of ultrasonography machines, with handheld devices commercially available including standalone transducers interfacing with smart phones," they noted. "The [deep learning] algorithm developed in this study is relatively compact (approximately 1.5 GB) and trained on images from multiple vendors, and it therefore could be ported to work on multiple platforms."