Novice users of lung ultrasound (LUS) can successfully acquire diagnostic-quality images with AI assistance, according to research published January 15 in JAMA Cardiology.

A team led by Christiana Baloescu, MD, from Yale University in New Haven, CT, found that trained healthcare professionals using AI assistance acquired LUS images that met diagnostic standards at a rate comparable to that of expert sonographers working without AI.

“This research demonstrates AI's potential to close the skills gap in lung ultrasound acquisition, making it more accessible to operators with limited training,” Baloescu told AuntMinnie.com.

While LUS is a useful tool in diagnosing patients with dyspnea, including those with cardiogenic pulmonary edema, the researchers noted that image quality may vary with the sonographer’s expertise. Compounding this variation, hospitals in rural or remote areas may lack the resources to properly provide patient care.

Previous research suggests that AI can help with these problems by guiding novice ultrasound users to acquire the high-quality images needed to make a confident diagnosis. Yet much of this research has focused on AI interpretation rather than on acquisition guidance or autocapture, Baloescu said.

“Sonographers and sonologists can leverage AI-guided systems to perform lung ultrasounds confidently, especially in environments lacking experts, potentially enhancing diagnostic capabilities and patient care,” she added.

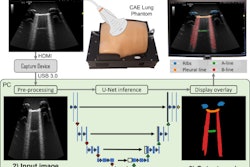

The Baloescu team studied the ability of investigational AI software to guide trained healthcare professionals who are not expert sonographers in acquiring diagnostic-quality lung ultrasound images. These professionals included medical assistants, respiratory therapists, and nurses.

The team conducted its multicenter study in 2023 on adult patients who presented with shortness of breath. The patients underwent two lung ultrasound exams, one by a non-expert with AI assistance and another by an expert lung ultrasound specialist with no AI assistance. The non-experts had AI training prior to the exams. (The AI software automatically captures images by using an 8-zone lung ultrasound protocol.)

A panel of five lung ultrasound readers who were blinded to the results provided remote review and ground truth validation. The final analysis included 176 patients with an average age of 63 years and an average body mass index (BMI) of 31. Of the total, 81 patients were female.

Overall, the non-experts performed well with AI assistance, with 98.3% of acquired images being of diagnostic quality. The researchers reported no statistically significant difference (1.7%, p = 0.16) in quality compared with the expert-acquired images.

One “surprising” finding Baloescu noted was that the AI-assisted operators acquired higher-quality clips than experts in zone 6. This zone lies in the left anterior inferior area, where the heart's presence can make image acquisition a challenge. Baloescu said this result indicates how AI can help acquire images in this area.

Finally, image acquisition with AI assistance took an average of 16.5 minutes for the non-experts. Non-experts who were physicians took an average of 10.8 minutes to acquire images while the non-physician professionals took an average of 18 minutes per exam.

Baloescu said that while these results highlight how AI-guided LUS acquisition could be useful in the hands of novices in environments where experts are scarce, the technology could also be used in higher-resource environments.

“For instance, nurses or community health workers could use AI-guided LUS to screen for lung conditions such as pneumonia, pulmonary edema, or pleural effusions, with the captured clips reviewed remotely by a centralized physician,” she said. “This approach is particularly impactful when paired with portable ultrasound devices.”

Baloescu added that future research aims to combine AI algorithms for image guidance and auto-capture with advanced capabilities for detecting lung pathologies such as B-lines, pleural effusions, consolidations, and pleural line abnormalities.

“We would love to see how these integrated guidance and interpretation algorithms work in diverse practice settings to evaluate their real-world effectiveness and usability,” she told AuntMinnie.com.
