An explainable AI model for cancer detection accurately outlined breast tumor location at breast MRI, according to research published July 15 in Radiology.

A team led by Felipe Oviedo, PhD, from Microsoft’s AI for Good Lab in Redmond, Washington, also found that its AI model outperformed commonly used models in both high- and low-cancer-prevalence settings.

"This model really excels in imbalanced datasets, which is what we have in the scenario of breast screening, where the event of a cancer being detected is fairly rare," corresponding author Savannah Partridge, PhD, from the University of Washington in Seattle, told AuntMinnie. "It occurs in about 2% to 3% of exams, and that’s what we had here on our dataset that we trained the model on."

While breast MRI is more sensitive than conventional mammography, the modality is limited by higher costs and higher false-positive rates. Breast radiologists continue to explore how AI can help address these limitations, and previous studies have reported higher accuracy and better efficiency with AI assistance.

The researchers, however, pointed out that existing AI models have not gone through rigorous evaluation in populations with low cancer prevalence. They also noted that existing models fall short in terms of interpretability.

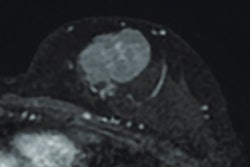

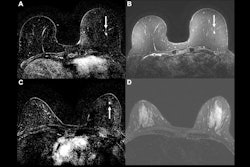

![A method overview of the explainable AI model.](https://img.auntminnie.com/mindful/smg/workspaces/default/uploads/2025/07/screenshot-2025-07-15-061851.GG8mtgLbKy.png?auto=format%2Ccompress&fit=max&q=70&w=400)

A method overview of the explainable AI model. (A) Diagram shows deep explainable anomaly detection at breast MRI. A maximum intensity projection (MIP) of a breast is passed to a fully convolutional neural network model trained using an explainable anomaly detection loss function (fully convolutional data description [FCDD]). The model directly outputs an up-sampled heat map of the anomalous pixels (anomaly heat map). The mean anomaly score across all pixels is used to classify a case as abnormal (malignant) or not. (B) Diagram shows a conceptual comparison between binary classification and anomaly detection: Shading represents the learned normal (blue) and abnormal (red) feature spaces, and dots represent individual normal (blue) and abnormal (red) cases. Binary classification learns a classification boundary between normal and abnormal cases, but model performance is affected by the scarcity and variability of malignant cases. Anomaly detection focuses on robustly learning the normal class and aims to identify abnormal cases accurately under these conditions. Image: RSNA

Oviedo, Partridge, and colleagues developed their explainable AI model to detect breast cancer on MRI exams, aiming for the model to be effective in both high- and low-cancer-prevalence settings.

The retrospective analysis included 9,738 breast MRI exams from a single institution collected between 2005 and 2022. The team also performed external testing in a publicly available multicenter dataset, consisting of 221 exams.

The team used 9,567 consecutive MRI exams to develop its explainable fully convolutional data description (FCDD) anomaly detection model, with the goal of finding malignancies on contrast-enhanced MRI scans.
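
As a rough illustration of the scoring step described in the figure caption, the sketch below averages a per-pixel anomaly heat map into a single exam-level score and compares it with a cutoff. The function names, the toy heat maps, and the threshold value are all assumptions made for illustration; the study's actual network, preprocessing, and operating point are not reproduced here.

```python
from statistics import mean


def mean_anomaly_score(heat_map):
    """Average the per-pixel anomaly values of a 2D heat map (a list of rows)."""
    pixels = [value for row in heat_map for value in row]
    return mean(pixels)


def classify_exam(heat_map, threshold):
    """Flag an exam as abnormal when its mean anomaly score exceeds the threshold."""
    return mean_anomaly_score(heat_map) > threshold


# Toy 3x3 heat maps; the values are illustrative and not taken from the study.
normal_map = [
    [0.0, 0.1, 0.0],
    [0.1, 0.0, 0.1],
    [0.0, 0.1, 0.0],
]
suspicious_map = [
    [0.0, 0.1, 0.0],
    [0.1, 0.9, 0.8],
    [0.0, 0.7, 0.1],
]

THRESHOLD = 0.2  # hypothetical operating point, chosen only for this example

print(classify_exam(normal_map, THRESHOLD))      # prints: False
print(classify_exam(suspicious_map, THRESHOLD))  # prints: True
```

Because the same heat map drives both the exam-level score and the localization overlay, the pixels that raise the score are also the ones a reader would see highlighted.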

The researchers evaluated the model’s performance in three cohorts: grouped cross-validation for both balanced (20% malignant) and imbalanced (1.85% malignant) detection tasks, an internal independent test set (171 exams), and an external dataset.

The FCDD model outperformed the benchmark binary cross-entropy model in cross-validation for both balanced and imbalanced detection tasks, among other scenarios.

Comparison between FCDD and benchmark models, measured by area under the receiver operating characteristic curve (AUC)

| Setting | Benchmark | FCDD | p-value |
| --- | --- | --- | --- |
| Balanced detection tasks | 0.81 | 0.84 | < 0.001 |
| Imbalanced detection tasks | 0.69 | 0.72 | < 0.001 |
| Balanced detection tasks (internal test set) | 0.72 | 0.81 | < 0.001 |
| Imbalanced detection tasks (internal test set) | 0.76 | 0.78 | < 0.02 |
| Spatial agreement with reference-standard annotations (internal test set) | 0.81 | 0.92 | < 0.001 |
| Balanced detection task (external test set) | 0.79 | 0.86 | < 0.001 |
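
AUC figures such as those above can be read as the probability that a randomly chosen malignant exam receives a higher score than a randomly chosen nonmalignant one. A minimal sketch of that calculation follows, using made-up scores and labels rather than study data; the function name `pairwise_auc` is hypothetical.

```python
def pairwise_auc(scores, labels):
    """Estimate AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: 1 = malignant, 0 = not malignant. Ties count as half a correct pair.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")

    correct = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(positives) * len(negatives))


# Illustrative anomaly scores only; not taken from the study.
toy_scores = [0.9, 0.8, 0.4, 0.2, 0.7, 0.5, 0.3, 0.1]
toy_labels = [1, 1, 1, 1, 0, 0, 0, 0]

print(pairwise_auc(toy_scores, toy_labels))  # prints: 0.6875
```

The pairwise form is quadratic in the number of exams, so it suits small examples; a rank-based implementation from a statistics library would be the usual choice for a dataset of thousands of exams.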

At a fixed 97% sensitivity in the imbalanced setting, the FCDD model achieved an average specificity of 13% across folds, compared with 9% for the benchmark model (p = 0.02).
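
To make the fixed-sensitivity comparison concrete, the sketch below picks the highest score cutoff that still meets a target sensitivity and then reports the specificity at that cutoff. It is an assumption about how such an operating point can be computed, not the authors' evaluation code; the function name, toy scores, and the 75% target used in the example are hypothetical.

```python
import math


def specificity_at_sensitivity(scores, labels, target_sensitivity):
    """Return (threshold, specificity) at the highest cutoff meeting the target sensitivity.

    labels: 1 = malignant, 0 = not malignant.
    An exam is called positive when its score is >= threshold.
    """
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")

    # Number of malignant exams that must be flagged to reach the target.
    # The small epsilon guards against floating-point overshoot before rounding up.
    needed = max(1, math.ceil(target_sensitivity * len(positives) - 1e-9))
    threshold = positives[needed - 1]

    true_negatives = sum(1 for s in negatives if s < threshold)
    return threshold, true_negatives / len(negatives)


# Illustrative anomaly scores only; not taken from the study.
toy_scores = [0.9, 0.8, 0.4, 0.2, 0.7, 0.5, 0.3, 0.1]
toy_labels = [1, 1, 1, 1, 0, 0, 0, 0]

print(specificity_at_sensitivity(toy_scores, toy_labels, 0.75))  # prints: (0.4, 0.5)
```

Pushing the target sensitivity toward 100% forces the cutoff down to the lowest-scoring malignant exam, which is why specificity at a 97% operating point tends to be low for any model.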

Partridge said that, given these results, the model could improve breast MRI screening and triage in general clinical practice and could also serve as a second reader for radiologists.

"It really relies on fairly little imaging information from the whole diagnostic exam," she told AuntMinnie. "We can apply this tool to both abbreviated breast MRI exams as well as full diagnostic exams, so in that way, it can help with reducing scan and interpretation times."

The findings add to the discussion on explainable AI models for detecting breast cancer at contrast-enhanced MRI, according to an accompanying editorial by Min Sun Bae, MD, PhD, from Korea University in Seoul and Sungwon Ham, PhD, from Korea University Ansan Hospital.

"It offers a blueprint for moving opaque AI systems toward interpretable and radiologist-supportive tools," the two wrote. "The key challenge will be to preserve explainability and robustness while improving the sensitivity of the model for detecting subtle MRI findings such as nonmass enhancement."

The full study is available in Radiology.