A deep-learning algorithm successfully detected breast cancer on screening mammography, in some cases as early as two years before it was diagnosed during conventional interpretation, according to research published online January 11 in Nature Medicine. The algorithm also performed well with digital breast tomosynthesis (DBT) exams.

Researchers from RadNet subsidiary DeepHealth developed an artificial intelligence (AI) algorithm using a progressive training approach that mimicked how humans learn to perform difficult tasks. They found that the resulting model significantly outperformed five human readers and also demonstrated strong generalizability across different populations and equipment types, according to lead author and DeepHealth co-founder and Chief Technology Officer Bill Lotter, PhD.

"Our results point to the clinical utility of AI for mammography in facilitating earlier breast cancer detection, as well as an ability to develop AI with similar benefits for other medical imaging applications," Lotter said.

Progress has been made in applying deep learning to the task of breast cancer detection, but there remains meaningful room for improvement, particularly in developing methods for DBT and in demonstrating widespread generalizability, according to the researchers. They sought to address the challenges of producing a high-performing AI model by progressively training an algorithm on a series of increasingly difficult tasks.

"By leveraging prior information learned in each successive training stage, this strategy results in AI that detects cancer accurately while also relying less on highly-annotated data," Lotter said in a statement. "Our approach and validation encompass DBT, which is particularly important given the growing use of DBT and the significant challenges it presents from an AI perspective."

After training the algorithm on five large datasets from the U.S., U.K., and China, the researchers compared its performance with that of five expert radiologists on index exams (mammograms acquired up to three months prior to biopsy-proven cancer) and "pre-index" exams (mammograms that were acquired 12 to 24 months prior to the index exam and were interpreted as negative in the clinical setting). These cases were gathered from a different population than the one used to train the model.

On the index set (131 cancer exams and 154 confirmed negative exams), the algorithm yielded higher performance than the five readers, as indicated below:

- 14.2% absolute increase in sensitivity (at the average reader specificity)

- 24% absolute increase in specificity (at the average reader sensitivity)

The differences were statistically significant (p < 0.0001).
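To make the phrase "at the average reader specificity" concrete, here is a minimal sketch of how such a matched comparison can be computed: the model's score threshold is set so that its specificity is at least the readers' average, and its sensitivity at that threshold is then compared with the readers' average sensitivity. The function name, the scores, and the reader averages below are hypothetical toy values for illustration only; they are not the study's data or the authors' code.

```python
def sensitivity_at_specificity(scores_pos, scores_neg, target_specificity):
    """Sensitivity at the lowest threshold whose specificity meets the target.

    An exam is called positive when its score is strictly above the threshold.
    """
    # Candidate thresholds: "call everything positive" plus every observed score.
    thresholds = [float("-inf")] + sorted(set(scores_pos) | set(scores_neg))
    for t in thresholds:
        specificity = sum(s <= t for s in scores_neg) / len(scores_neg)
        if specificity >= target_specificity:
            # Thresholds are ascending, so the first one that meets the
            # target gives the highest sensitivity available at that target.
            return sum(s > t for s in scores_pos) / len(scores_pos)
    return 0.0  # not reached for non-empty inputs and a target <= 1.0


# Hypothetical model scores (toy values, not from the study).
cancer_scores = [0.9, 0.8, 0.7, 0.4, 0.2]
negative_scores = [0.1, 0.2, 0.3, 0.5, 0.6]

# Hypothetical average reader operating point (toy values).
reader_sensitivity = 0.4
reader_specificity = 0.8

model_sensitivity = sensitivity_at_specificity(
    cancer_scores, negative_scores, reader_specificity
)
absolute_increase = model_sensitivity - reader_sensitivity
print(f"absolute increase in sensitivity: {absolute_increase:.1%}")
```

The specificity comparison works the same way with the roles swapped: fix the threshold so that the model's sensitivity matches the readers' average, then compare specificities.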

The algorithm also outperformed the readers on the pre-index set (120 cancer exams and 154 confirmed negative exams), as follows:

- 17.5% absolute increase in sensitivity (at the average reader specificity)

- 16.2% absolute increase in specificity (at the average reader sensitivity)

These differences were also statistically significant (p = 0.0009 and p = 0.0008, respectively). What's more, at 90% specificity the algorithm would have flagged 45.8% of the missed cancer cases for follow-up, according to the researchers.

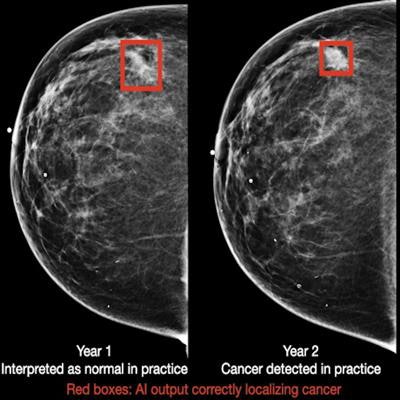

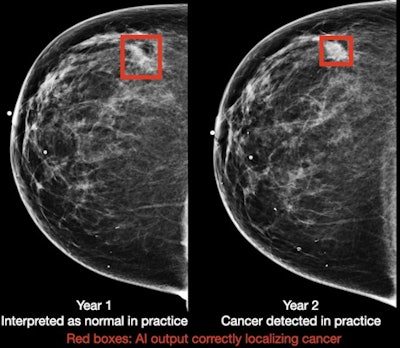

Breast cancer case detected by AI algorithm on mammogram one year before it was diagnosed by a radiologist. Image courtesy of DeepHealth.

To assess the generalizability of the model, the researchers also evaluated its standalone performance on five large datasets that spanned multiple populations (Oregon, U.K., China), equipment vendors (Hologic and GE Healthcare), and modalities (digital mammography and DBT). It produced areas under the curve ranging from 0.927 to 0.971 in the digital mammography datasets and 0.922 to 0.957 for the DBT datasets.
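For readers unfamiliar with the metric, the area under the receiver operating characteristic curve (AUC) can be read as the probability that a randomly chosen cancer exam receives a higher score than a randomly chosen negative exam, with ties counted as half. The sketch below computes it that way on hypothetical toy scores; it illustrates the metric only and does not reproduce the study's evaluation pipeline.

```python
def auc(scores_pos, scores_neg):
    """AUC as the fraction of (cancer, negative) pairs ranked correctly.

    Ties between a cancer score and a negative score count as half a win.
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical model scores (toy values, not from the study).
toy_cancer_scores = [0.9, 0.8, 0.7, 0.4, 0.2]
toy_negative_scores = [0.1, 0.2, 0.3, 0.5, 0.6]

print(f"toy AUC: {auc(toy_cancer_scores, toy_negative_scores):.3f}")
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is the scale on which the reported values of 0.922 to 0.971 should be read.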

"One particular reason why our system may generalize well is that it has also been trained on a wide array of sources, including five datasets in total," the authors wrote. "Altogether, our results show great promise towards earlier cancer detection and improved access to screening mammography using deep learning."