A new display interface for radiology workstations combines virtual reality with a traditional 2D interface to provide simultaneous 2D and 3D visualization of medical images. Using it improved the diagnostic performance of medical students and radiology residents in a study published in the February issue of the Journal of Digital Imaging.

Researchers from Australia and New Zealand developed a hybrid interface for examining, marking, and rotating medical images in both 2D and 3D at the same time. They found that medical students and radiology residents who examined CT scans on the hybrid interface were able to diagnose scoliosis more accurately than when using a 2D or 3D interface alone (J Digit Imag, February 2018, Vol. 31:1, pp. 56-73).

Combining 2D and 3D input provides substantial benefits for diagnosis, including increased efficiency for novice users (medical students) and increased accuracy for both novice and experienced users (radiology residents), first author Harish Mandalika told AuntMinnie.com. Mandalika is a graduate student in the Human Interface Technology Lab at the University of Canterbury in Christchurch, New Zealand.

"[The interface] can offer significant advantages to a subset of diagnostic radiology tasks that rely on some level of 3D manipulation such as measuring the angle/displacement of broken bone segments, measuring brain aneurysms, etc.," he said. "I also believe that the hybrid interface would largely benefit novice users and can be used as a learning/training tool."

2-in-1 visualization

Evaluating medical images in 3D by rotating them in one or more of the three anatomical planes is common practice in 3D modeling, surgery simulation, and computer-aided design, and it is occasionally applicable to radiological diagnosis, according to the authors. Conventional radiology software, however, usually relies on a 2D interface and 2D input devices such as a computer mouse for this task, and rotating images in 3D with a mouse on a flat display that offers limited depth perception can be challenging.

Prior research has explored the potential benefits of using a 3D interface rather than a 2D one to examine virtual objects in 3D. Looking instead to unite both formats for diagnostic radiology, Mandalika and colleagues developed an interface that allows for simultaneous 2D and 3D visualization of medical images, with a stylus used for image manipulation.

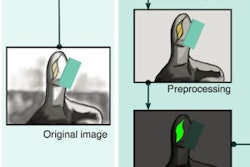

The hybrid interface combines a traditional 2D interface with a virtual reality device for synchronous 2D and 3D viewing of medical images. Image courtesy of Harish Mandalika.

This 2D/3D hybrid interface consists of a virtual reality device (zSpace AIO, zSpace) combined with a standard 2D display, mouse, and keyboard, all connected to a single workstation computer. The 3D component includes a stereoscopic display with embedded motion-tracking cameras, polarized glasses, and a 3D stylus.

The user first opens an image dataset in a proprietary application on the 2D part of the interface; the researchers designed this application to resemble a standard radiology diagnosis tool. Once the images are loaded, a marching cubes algorithm automatically extracts a 3D model from them in real time and displays it on the 3D display of the virtual reality device.
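
The article does not describe the researchers' implementation. As a rough illustration of what marching cubes does, the sketch below shows its first two stages in pure Python: classifying each cube of eight neighboring voxels by which corners fall below an iso-value (for CT, an intensity threshold such as one separating bone from soft tissue; the value used here is hypothetical), and linearly interpolating where the surface crosses each cube edge. A full implementation then looks up the resulting 8-bit case index in a 256-entry triangle table to join the crossing points into triangles. That table is omitted here; in practice a library routine such as scikit-image's `skimage.measure.marching_cubes` handles it. The function names are hypothetical.

```python
from itertools import product

# One common numbering of a cell's 8 corners (x, y, z offsets) and its 12 edges.
CORNERS = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
           (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (4, 5), (5, 6),
         (6, 7), (7, 4), (0, 4), (1, 5), (2, 6), (3, 7)]


def cube_index(volume, x, y, z, iso):
    """8-bit case index for the cell at (x, y, z): bit i is set when corner i is below iso."""
    index = 0
    for bit, (dx, dy, dz) in enumerate(CORNERS):
        if volume[x + dx][y + dy][z + dz] < iso:
            index |= 1 << bit
    return index


def interpolate(p1, p2, v1, v2, iso):
    """Point on edge p1-p2 where the linearly interpolated intensity equals iso."""
    t = (iso - v1) / (v2 - v1)
    return tuple(a + t * (b - a) for a, b in zip(p1, p2))


def surface_crossings(volume, iso):
    """Map each cell the iso-surface passes through to its edge-crossing points.

    volume is a nested list indexed volume[x][y][z].
    """
    nx, ny, nz = len(volume), len(volume[0]), len(volume[0][0])
    cells = {}
    for x, y, z in product(range(nx - 1), range(ny - 1), range(nz - 1)):
        if cube_index(volume, x, y, z, iso) in (0, 255):
            continue  # all corners on one side: the surface misses this cell
        points = []
        for a, b in EDGES:
            pa = tuple(c + o for c, o in zip((x, y, z), CORNERS[a]))
            pb = tuple(c + o for c, o in zip((x, y, z), CORNERS[b]))
            va = volume[pa[0]][pa[1]][pa[2]]
            vb = volume[pb[0]][pb[1]][pb[2]]
            if (va < iso) != (vb < iso):  # edge straddles the iso-value
                points.append(interpolate(pa, pb, va, vb, iso))
        cells[(x, y, z)] = points
    return cells
```

For example, a 3 x 3 x 3 volume of zeros with a single bright center voxel (value 100) and `iso=50` yields eight cells, each with three crossing points at the midpoints of the edges that touch the center voxel.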

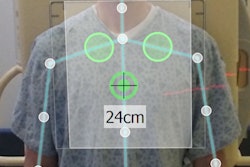

By moving and clicking the stylus, a person wearing the virtual reality glasses can rotate and annotate the images, as well as perform a number of additional tasks such as collecting measurements and marking regions of interest. The position and orientation of the object in the 2D and 3D displays are synchronized.
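
The paper's synchronization mechanism is not described here. One simple way to keep two views in step, shown below as a sketch under stated assumptions, is to store the model's orientation in a single shared object that both the 2D and 3D views subscribe to, so that one stylus rotation updates both at once. The orientation is reduced to the normal vector of the viewing plane and rotated with Rodrigues' rotation formula; the class names `SharedPose` and `View` are hypothetical.

```python
import math


def rotate(v, axis, angle):
    """Rotate vector v about a unit-length axis by angle radians (Rodrigues' formula)."""
    kx, ky, kz = axis
    vx, vy, vz = v
    c, s = math.cos(angle), math.sin(angle)
    dot = kx * vx + ky * vy + kz * vz
    cross = (ky * vz - kz * vy, kz * vx - kx * vz, kx * vy - ky * vx)  # axis x v
    return tuple(vi * c + ci * s + ki * dot * (1 - c)
                 for vi, ci, ki in zip(v, cross, axis))


class View:
    """Stand-in for a 2D or 3D display that redraws when the pose changes."""

    def __init__(self, name):
        self.name = name
        self.normal = None

    def on_pose_changed(self, normal):
        self.normal = normal  # a real view would re-render here


class SharedPose:
    """Single source of truth for orientation; every subscribed view is notified."""

    def __init__(self):
        self.normal = (0.0, 0.0, 1.0)  # start with an axial viewing plane
        self._listeners = []

    def subscribe(self, view):
        self._listeners.append(view)
        view.on_pose_changed(self.normal)

    def rotate(self, axis, degrees):
        """Called when the stylus (or mouse) rotates the model."""
        self.normal = rotate(self.normal, axis, math.radians(degrees))
        for view in self._listeners:
            view.on_pose_changed(self.normal)
```

Because neither view owns the orientation, input from either device updates both displays identically; a 90-degree stylus rotation about the x-axis leaves both views with a normal of approximately (0, -1, 0).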

Uniting the 2D and 3D interfaces in a single setup thus gives users the combined advantages of each, Mandalika said.

Improved diagnostic performance

After developing the hybrid interface, the investigators tested it on a radiological task, diagnosing scoliosis, and compared its performance with that of a 2D-only interface and a 3D-only interface.

"The goal of this evaluation was to determine the effectiveness of our hybrid interface for a specialized radiological diagnosis task," the authors wrote. "We wished to test the effect of providing an easier means for rotating the arbitrary plane, on task performance and accuracy, compared to using the 2D mouse."
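
Rotating the arbitrary plane means re-slicing the CT volume along an oblique plane rather than one of the three standard anatomical planes. A minimal nearest-neighbor sketch of that resampling step follows. The function name, the `volume[x][y][z]` layout, and describing the plane by two in-plane unit vectors `u` and `v` are assumptions for illustration; clinical software would typically use trilinear or higher-order interpolation instead.

```python
def oblique_slice(volume, center, u, v, size, fill=0):
    """Sample a size x size slice through `center`, spanned by unit vectors u and v.

    volume is a nested list indexed volume[x][y][z]. Each output pixel takes the
    value of the nearest voxel, or `fill` if the sample point lies outside the volume.
    """
    nx, ny, nz = len(volume), len(volume[0]), len(volume[0][0])
    half = size // 2
    image = []
    for j in range(size):
        row = []
        for i in range(size):
            s, t = i - half, j - half  # in-plane offsets from the center pixel
            x, y, z = (round(c + s * a + t * b) for c, a, b in zip(center, u, v))
            if 0 <= x < nx and 0 <= y < ny and 0 <= z < nz:
                row.append(volume[x][y][z])
            else:
                row.append(fill)
        image.append(row)
    return image
```

With `u = (1, 0, 0)` and `v = (0, 1, 0)` this reproduces an ordinary axial slice; tilting `v` to, say, `(0, 0.6, 0.8)` samples a plane angled between the axial and coronal orientations, which is the kind of adjustment a stylus makes more direct than a mouse.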

In the trial, 21 fourth-year medical students and 10 radiology residents obtained a scoliosis angle measurement from three different sets of abdominal CT scans using a distinct interface for each set. The medical students had no experience using diagnostic tools and the radiology residents had one to two years of experience using a 2D interface. Each participant observed a five-minute interface demonstration and completed a five-minute training session before beginning the diagnostic task.
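
The article does not specify how the scoliosis angle was measured. Assuming it resembles the conventional Cobb angle, the angle between two lines drawn along tilted vertebral endplates, the measurement reduces to the angle between two annotated line segments, as in this sketch. The function name and the use of 2D image coordinates are hypothetical.

```python
import math


def line_angle_deg(p1, p2, q1, q2):
    """Acute angle in degrees between line p1-p2 and line q1-q2 (2D points)."""
    ux, uy = p2[0] - p1[0], p2[1] - p1[1]
    vx, vy = q2[0] - q1[0], q2[1] - q1[1]
    norm = math.hypot(ux, uy) * math.hypot(vx, vy)
    if norm == 0:
        raise ValueError("each line needs two distinct points")
    # abs() folds obtuse results onto the acute angle, since line direction is arbitrary
    cos_theta = min(1.0, abs(ux * vx + uy * vy) / norm)
    return math.degrees(math.acos(cos_theta))
```

The order in which a user clicks the two endpoints of each line does not affect the result, which matters when annotations are placed freehand with a stylus.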

On average, the medical students completed the assignment 51.3 seconds faster (p < 0.0005) when using the hybrid interface instead of the 2D interface, whereas it took the residents 44.7 seconds longer (p = 0.012) when using the hybrid interface. The medical students ended up completing the task with the hybrid interface 69.96 seconds faster than the residents (p < 0.0005).

Despite these differences in speed, medical students and residents alike were most accurate when they used the hybrid interface, which reduced the absolute error relative to the 2D interface by 4.8 degrees (p = 0.0005) for students and by 1.7 degrees (p = 0.01) for residents.

2D, 3D, and hybrid computer interfaces for assessing scoliosis (averages)

| Measure | Medical students, 2D | Medical students, 3D | Medical students, hybrid | Radiology residents, 2D | Radiology residents, 3D | Radiology residents, hybrid |
|---|---|---|---|---|---|---|
| Time to complete task (seconds) | 167.1 | 142.9 | 115.8 | 141.1 | 254.4 | 185.8 |
| Absolute error (degrees) | 7.1 | 2.8 | 2.3 | 3.9 | 3.0 | 2.2 |
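
The differences quoted earlier can be checked against the table's rounded averages. This small sketch, whose dictionary is simply a transcription of the table, reproduces them. The cross-group gap comes out as 70.0 seconds rather than the reported 69.96, presumably because the paper worked from unrounded averages.

```python
# Averages transcribed from the table: time in seconds,
# absolute error of the angle measurement (assumed to be in degrees).
AVERAGES = {
    ("student", "2D"): {"time": 167.1, "error": 7.1},
    ("student", "3D"): {"time": 142.9, "error": 2.8},
    ("student", "hybrid"): {"time": 115.8, "error": 2.3},
    ("resident", "2D"): {"time": 141.1, "error": 3.9},
    ("resident", "3D"): {"time": 254.4, "error": 3.0},
    ("resident", "hybrid"): {"time": 185.8, "error": 2.2},
}


def difference(metric, first, second):
    """Return first - second for the given metric, rounded to one decimal place."""
    return round(AVERAGES[first][metric] - AVERAGES[second][metric], 1)


checks = {
    "students: 2D minus hybrid time (s)": difference("time", ("student", "2D"), ("student", "hybrid")),
    "residents: hybrid minus 2D time (s)": difference("time", ("resident", "hybrid"), ("resident", "2D")),
    "hybrid: residents minus students time (s)": difference("time", ("resident", "hybrid"), ("student", "hybrid")),
    "students: 2D minus hybrid error": difference("error", ("student", "2D"), ("student", "hybrid")),
    "residents: 2D minus hybrid error": difference("error", ("resident", "2D"), ("resident", "hybrid")),
}
for label, value in checks.items():
    print(f"{label}: {value}")
```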

"Using our hybrid interface, [the students'] accuracy improved significantly and was even comparable to that of the residents," the authors wrote. "We believe this has major implications for improving diagnostic radiology training."

Added functionality

What's more, most of the users preferred the hybrid interface because it combined components from the 2D and 3D interfaces, including the conventional 2D tools (for annotations and measurements), the stylus for 3D slice manipulation, and a synchronized view of the 2D scans and 3D model.

Although the hybrid interface appeared to improve diagnostic accuracy and ease of use, the study included only 10 radiology residents. Further studies with a larger group of radiologists diagnosing a variety of conditions would help confirm these findings, according to the authors.

The hybrid interface is currently commercially available as part of the Medipix All Resolution System (MARS) Vision workstation (MARS Bioimaging).

Since submitting the study for publication, the researchers have been working on adding more functionality to the tool, Mandalika said.

During testing, some users found it difficult to perform precision tasks, such as annotating and measuring, with the stylus, he noted. Most participants had to rest their elbow on the table or use their nondominant hand to steady their dominant hand. This observation led the researchers to consider applying the Precise and Rapid Interaction Through Scaled Manipulation (PRISM) technique by Frees et al. to their interface. PRISM reduces the motion sensitivity of virtual objects when users move their hand slowly to make precise adjustments.
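
PRISM works by scaling the control-display gain with hand speed. A simplified sketch of that idea follows. Below a minimum speed, motion is treated as tremor and ignored; between that and a scaling constant, the object moves by a fraction of the hand's displacement proportional to hand speed; at or above the scaling constant, the object follows the hand one-to-one. The threshold values are hypothetical, and the published technique also includes an offset-recovery step that is omitted here.

```python
import math

MIN_SPEED = 0.01         # m/s; slower motion is treated as tremor (hypothetical value)
SCALING_CONSTANT = 0.15  # m/s; at or above this speed, motion is 1:1 (hypothetical value)


def prism_gain(hand_speed, min_speed=MIN_SPEED, scaling_constant=SCALING_CONSTANT):
    """Control-display gain as a function of hand speed."""
    if hand_speed < min_speed:
        return 0.0
    if hand_speed < scaling_constant:
        return hand_speed / scaling_constant
    return 1.0


def prism_step(object_pos, hand_delta, dt):
    """Move the object by the hand displacement over dt seconds, scaled by the gain."""
    speed = math.sqrt(sum(d * d for d in hand_delta)) / dt
    gain = prism_gain(speed)
    return tuple(p + gain * d for p, d in zip(object_pos, hand_delta))
```

With these values, a 3 mm hand movement over 40 ms (0.075 m/s) moves the object only 1.5 mm, while a faster 20 mm sweep over the same interval is passed through unchanged, so slow, careful motions become finer without slowing down large repositioning moves.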

They are also considering implementing a voice control option to facilitate switching between 2D and 3D modes, as well as tracking users' hand and head movements to shed more light on user behavior and potential long-term learning effects of using the interface.

"We are looking at other applications for the tool such as orthopedic surgery planning," he said. "We are also looking at extending the 3D experience beyond the single user by screen mirroring, 3D scene mirroring, or augmented reality."