A multinational team of researchers has developed standardized image processing techniques that show promise for significantly improving the reproducibility of AI-based radiomics analysis.

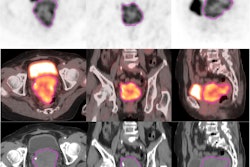

In a three-phase study conducted as part of the Image Biomarker Standardization Initiative (IBSI), 15 teams from seven countries developed a set of eight standardized software “filters” for radiomics analysis and then tested their performance on a multimodal imaging dataset of CT, FDG-PET, and MR images. With these filters, 94% of radiomics features were deemed reproducible.

Co-first authors Philip Whybra, PhD, of Cardiff University in the U.K., and Alex Zwanenburg-Bezemer, PhD, of the National Center for Tumor Diseases Dresden in Germany, and colleagues shared their results in an article published February 6 in Radiology.

“The use of these standards ensures that imaging AI is easier to reproduce and validate externally, paving the way for the most promising AI systems to be introduced into the clinic,” Zwanenburg-Bezemer told AuntMinnie.com.

Before an AI algorithm can analyze a medical image, software must perform several processing steps. However, these steps often suffer from reproducibility problems.

“Interestingly, we observed that you often get different results by using a different software package, even for the same image and performing the same processing steps,” Zwanenburg-Bezemer said. “This lack of reproducibility has a huge consequence: scientists cannot accumulate sufficient evidence for many interesting applications of imaging AI in clinical settings.”

Standardized filters

Fortunately, standards for image processing software can substantially reduce these differences. Following up on their previous work that standardized several processing steps, the researchers focused on convolutional image filters in this study.

These types of software filters, which enhance aspects of images to facilitate radiomics analysis, also constitute the main building block of convolutional neural networks – the most commonly used AI architecture for medical imaging analysis, Zwanenburg-Bezemer noted.

“The difficulty is figuring out what the ‘correct’ implementation of various image filters is, and we spent many months iterating on this,” Zwanenburg-Bezemer said.
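To illustrate how two implementations of the "same" convolutional filter can disagree, here is a minimal Python sketch. It is not taken from the study: the 1D mean filter, the sample values, and the choice of edge padding as the point of divergence are all assumptions made for illustration. It applies an identical three-sample mean filter twice, changing only how the signal is padded at its edges.

```python
import numpy as np

def mean_filter_1d(signal, width, pad_mode):
    """Apply a 1D mean (box) filter of odd `width`, padding the edges with `pad_mode`."""
    half = width // 2
    padded = np.pad(signal, half, mode=pad_mode)
    kernel = np.full(width, 1.0 / width)
    # "valid" keeps only positions where the kernel fully overlaps the padded
    # signal, so the output has the same length as the input.
    return np.convolve(padded, kernel, mode="valid")

# Hypothetical intensity profile, invented for this example.
signal = np.array([10.0, 10.0, 40.0, 10.0, 10.0, 70.0])

zero_padded = mean_filter_1d(signal, 3, "constant")   # edges padded with zeros
mirror_padded = mean_filter_1d(signal, 3, "reflect")  # edges mirrored

# True wherever the two padding conventions give different filtered values.
differs = ~np.isclose(zero_padded, mirror_padded)

print(zero_padded)
print(mirror_padded)
print(differs)
```

In this toy case the interior values agree and only the two edge samples differ. Any feature computed over a region that touches the image border would inherit that difference, which is the kind of ambiguity a reference standard has to settle.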

Using digital phantoms, 15 teams first established reference-filtered images for 33 of 36 filter configurations. Of these teams, 12 had developed publicly available radiomics software.

In the second phase of the study, the researchers used 22 filter and image processing configurations to derive reference values for 323 of 396 radiomics features extracted from filtered chest CT images. They then assessed the reproducibility of the resulting eight standardized convolutional filters on a public multimodal imaging dataset of 51 soft-tissue sarcoma patients from the Cancer Imaging Archive.

Reproducible results

Across nine teams, 458 (94.2%) of 486 radiomics features were found to have good-to-excellent reproducibility with these eight filters, defined as a lower bound of the 95% confidence interval for the intraclass correlation coefficient greater than 0.75.
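The reproducibility criterion can be sketched in a few lines of Python. This is a simplified illustration, not the authors' analysis code: it assumes the lower bounds of the 95% confidence intervals have already been computed, the function name is hypothetical, and the example values are invented. The last line simply recomputes the share implied by the reported counts.

```python
def count_reproducible(icc_lower_bounds, threshold=0.75):
    """Count features whose ICC 95% CI lower bound is strictly above the threshold."""
    return sum(1 for lower in icc_lower_bounds if lower > threshold)

# Hypothetical lower bounds for five features (made up for illustration).
example_lower_bounds = [0.91, 0.80, 0.75, 0.62, 0.99]

# A value of exactly 0.75 does not pass, because the criterion is "greater than".
n_reproducible = count_reproducible(example_lower_bounds)

# Share of reproducible features implied by the counts reported in the article.
reported_share = round(100 * 458 / 486, 1)

print(n_reproducible, reported_share)
```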

“In conclusion, we standardized eight types of convolutional filters for radiomics to ensure that the enhanced clinical insights that can be gained through their use can be validated and reproduced,” the authors wrote. “Going forward, developers should ensure compliance of their software with the proposed reference standards, and users are encouraged to use compliant software.”

The group has also posted a web-based tool to assist in checking compliance with these reference standards.

In the next phase of their work, the researchers are standardizing image processing systems commonly used in deep-learning algorithms.

Their full research article can be found here.

“[Apart] from working toward narrowing the translation gap between research and clinical use, the authors’ efforts toward standardization and reproducibility of convolutional filters also partially prevents wasted efforts in research,” wrote Merel Huisman, MD, of Radboud University Medical Center in Nijmegen, Netherlands, and Tugba Akinci D’Antonoli, MD, of the University of Basel in Switzerland, in an accompanying editorial. “Transparency and a diverse, interdisciplinary collaboration among researchers, vendors, and clinicians are the other major pillars in which we as a society need to invest to make an impact on patient care.”