Maybe your first time was watching frighteningly real-looking dinosaurs or incredibly lifelike animated toys, or perhaps it was in a virtual experience as an NFL quarterback on a Sony PlayStation 2. Most of us have been amazed in one way or another by 3D volume rendering. Far from Hollywood and video gaming, another realm is emerging in which we are increasingly amazed.

Applying this technology to medical imaging is changing the perspective of modern medicine and patient treatment, not just visually, but in actual patient diagnoses, treatments, and outcomes. Almost every physician has or will soon have an amazing experience when this technology directly impacts clinical practice. This 3D technology is still in the beginning stages but appears to be on the same unstoppable course of ubiquity that we have seen in Hollywood.

High-resolution imaging modalities, such as MRI and CT, combined with advances in computer technology, have prompted renewed interest and led to significant progress in the volumetric reconstruction of medical images. Many medical and scientific groups are currently assessing such techniques for various clinical applications and for medical education. Many clinical applications show significant potential for volumetric rendering of medical images, including the following:

- Diagnostics

- Preoperative planning

- Intraoperative navigation

- Postoperative validation

- Training

Dimensional developments

Recent developments in computer technology have fundamentally enhanced the role of medical imaging, from diagnosis to computer-aided surgery. Over the past two decades, 3D rendering of medical datasets has matured from an occasional data-presentation tool into a routine application in many clinical procedures. Today, with rapid advances in high-speed graphics computers, gathering and using clinical data is not as time-consuming or expensive as it once was. This has created a new vision for 3D imaging applications in medicine and a new definition for computer-aided detection (CAD) and computer-assisted surgery.

Today, the clinical applications of rendering 3D images have evolved from simple "animation" techniques to rendering the lifelike behavior of tissues. Physical models of skin and muscle structures are being derived from mathematical constructions and geometric calculations. Modern operating rooms have adopted real-time volumetric navigation techniques that fuse the 3D image with the patient's physical anatomy. In addition, recent progress in computer technology has made preoperative planning a powerful tool both for teaching surgical techniques and for deciding which surgical techniques to employ. Furthermore, postoperative validation from the 3D data is becoming a valuable aid for physicians in following up on their surgical procedures.

Since the introduction of computed tomography (CT) in the 1970s, accurate visualization and measurement of the internal organs and their spatial relationships to one another have been two of the main goals of medical imaging. A new trend emerging from the availability of new computational resources is the increased feasibility of fast volumetric data acquisition and reconstruction, which provides the means for efficient 3D-based analysis of the data.

Sources of patient data for advanced visualization

To obtain a 3D model of the patient's physical or functional anatomy, a set of spatially contiguous and aligned 2D axial slices of the region of interest is often needed. These slices can be acquired from various imaging sources. The most widely used sources of imaging for 3D reconstruction are MRI, MR angiography (MRA), functional MRI (fMRI), CT, CT angiography (CTA), PET, and ultrasound.

The data can be reconstructed in 3D regardless of the actual imaging source. However, the validity of the reconstructed 3D model depends directly on how precisely the data, or the region of interest, has been segmented. Isolating the region of interest from the dataset is a problem that has intrigued many scientists and researchers throughout the world. In certain cases the problem can be simplified if the images are acquired with 3D reconstruction in mind.
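Segmentation can be done in many ways, and the article does not prescribe a particular one. As one illustration, here is a minimal sketch of seeded region growing, a common textbook method. It assumes the volume is a nested Python list indexed `[z][y][x]`. The function name `region_grow` and the intensity window `lo`/`hi` are hypothetical choices made for this example.

```python
from collections import deque


def region_grow(volume, seed, lo, hi):
    """Return the set of (z, y, x) voxels that are 6-connected to `seed`
    and whose intensity lies in [lo, hi]. `volume` is indexed [z][y][x]."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    z0, y0, x0 = seed
    if not lo <= volume[z0][y0][x0] <= hi:
        return set()                      # seed itself is outside the window
    region = {seed}
    queue = deque([seed])
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:                          # breadth-first flood fill
        z, y, x = queue.popleft()
        for dz, dy, dx in steps:
            n = (z + dz, y + dy, x + dx)
            if (0 <= n[0] < nz and 0 <= n[1] < ny and 0 <= n[2] < nx
                    and n not in region
                    and lo <= volume[n[0]][n[1]][n[2]] <= hi):
                region.add(n)
                queue.append(n)
    return region


volume = [[[100, 0, 0],
           [0, 90, 95],
           [0, 0, 100]]]                  # one hypothetical 3 x 3 slice
region = region_grow(volume, seed=(0, 1, 1), lo=80, hi=120)
# region == {(0, 1, 1), (0, 1, 2), (0, 2, 2)}; the bright corner voxel
# (0, 0, 0) is excluded because it is not connected to the seed
```

The intensity window and the 6-connectivity rule are the main design choices here. Practical segmentation pipelines often add smoothing, shape constraints, or manual correction.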

For instance, MRI produces diagnostic images from the signal of hydrogen nuclei (protons) in the body. An MRI protocol called "fat saturation" can be particularly helpful for 3D image reconstruction because it suppresses the signal from fat. Cortical bone already yields very little MR signal, so the user is spared from manually segmenting fat and bone out of the images before reconstruction. Fat saturation uses a frequency-selective pulse to saturate the fat protons before data are acquired, as in a standard sequence. The fat protons then produce an insignificant signal, which removes fat from the acquired image.

A number of different fat-suppression techniques are available. Fat saturation is well suited to 3D analysis of the brain because it eliminates fat from the image. Removing fat and bone from the MR images allows 3D visualization of the brain and the internal organs without the need to segment the skull out of the image.

Volume rendering for advanced visualization

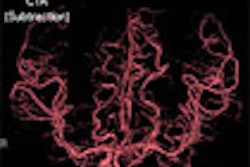

Volume rendering is a technique for displaying sampled scalar or vector fields without fitting any geometric primitives to the data. Instead, the volume is sampled directly by a set of virtual rays, analogous to x-rays, cast through the object. As each ray is traversed, the optical properties along its path are computed. These may include absorption, reflection, refraction, and emission. It is also possible to devise modes that have no natural physical interpretation but nevertheless provide useful information (see figure 1, below). For example, maximum intensity projection (MIP) displays the brightest voxel along each traversed ray and is commonly used to interpret angiographic studies.

Figure 1
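The two kinds of ray traversal described above can be sketched as follows. The sketch assumes an orthographic view along +z and a volume stored as a nested list indexed `[z][y][x]`. `mip` keeps the brightest sample on each ray. `composite_ray` accumulates emission and absorption from front to back. The transfer function passed in, and all names here, are hypothetical.

```python
def mip(volume):
    """Maximum intensity projection along z for a volume indexed [z][y][x]:
    each output pixel keeps the brightest voxel its ray passes through."""
    ny, nx = len(volume[0]), len(volume[0][0])
    return [[max(plane[y][x] for plane in volume) for x in range(nx)]
            for y in range(ny)]


def composite_ray(samples, transfer, cutoff=0.99):
    """Front-to-back emission-absorption compositing along one ray.
    `transfer` maps a sample value to (color, opacity), both in [0, 1]."""
    color, alpha = 0.0, 0.0
    for s in samples:
        c, a = transfer(s)
        color += (1.0 - alpha) * a * c   # emitted light, attenuated by what lies in front
        alpha += (1.0 - alpha) * a       # accumulated opacity
        if alpha >= cutoff:              # early ray termination: the rest is hidden
            break
    return color, alpha


def render(volume, transfer):
    """Cast one parallel ray per (y, x) pixel along +z and composite it."""
    ny, nx = len(volume[0]), len(volume[0][0])
    return [[composite_ray([plane[y][x] for plane in volume], transfer)[0]
             for x in range(nx)] for y in range(ny)]


volume = [[[1, 5]], [[7, 2]], [[3, 3]]]   # 3 hypothetical slices of 1 x 2 pixels
print(mip(volume))                        # [[7, 5]]
```

MIP ignores the ordering of samples along the ray, which is part of why it has no natural physical interpretation. Compositing, by contrast, depends on which samples lie in front of which.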

Classic ray casting traces one ray at a time. However, many newer algorithms process some or all of the rays simultaneously and exploit spatial coherence and locality of reference to improve performance dramatically. This approach is particularly attractive on platforms capable of hardware texture mapping. Drawing a stack of texture-mapped planes perpendicular to the line of sight produces results identical to sampling a volume with a set of parallel rays under orthographic projection. Similarly, ray casting under perspective projection can be emulated by drawing a set of texture-mapped concentric spheres centered at the eye (see figure 2, below). An arbitrarily complex lighting model can be included in the texture-mapping approach by using a multipass algorithm.

Figure 2
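The sketch below illustrates why slice-based texture mapping can reproduce ray casting under orthographic projection. It composites the same volume in two ways: one ray at a time, and one whole plane at a time, which is the order a stack of blended texture slices follows. It is a CPU analogy under stated assumptions, not GPU code. The assumptions are front-to-back blending, one sample per voxel plane, and hypothetical function names.

```python
def render_ray_order(volume, transfer):
    """Image order: march each pixel's ray through every plane before
    moving on to the next pixel. `volume` is indexed [z][y][x]."""
    ny, nx = len(volume[0]), len(volume[0][0])
    image = [[0.0] * nx for _ in range(ny)]
    for y in range(ny):
        for x in range(nx):
            color, alpha = 0.0, 0.0
            for plane in volume:                     # samples along one ray
                c, a = transfer(plane[y][x])
                color += (1.0 - alpha) * a * c
                alpha += (1.0 - alpha) * a
            image[y][x] = color
    return image


def render_plane_order(volume, transfer):
    """Plane order: blend one whole slice perpendicular to the view at a
    time, so every ray advances by one sample per pass (as with a stack of
    texture-mapped planes)."""
    ny, nx = len(volume[0]), len(volume[0][0])
    color = [[0.0] * nx for _ in range(ny)]
    alpha = [[0.0] * nx for _ in range(ny)]
    for plane in volume:                             # one "textured slice" per pass
        for y in range(ny):
            for x in range(nx):
                c, a = transfer(plane[y][x])
                color[y][x] += (1.0 - alpha[y][x]) * a * c
                alpha[y][x] += (1.0 - alpha[y][x]) * a
    return color
```

Each pixel sees the same samples in the same order, so the two functions return identical images. Only the loop nesting and the memory access pattern change, and that difference is what lets plane-order methods exploit coherence.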

The main advantages of volume rendering over conventional surface-rendering techniques are high image quality and the ability to preserve the integrity of the original data throughout the rendering process. Because the data can be sampled and filtered correctly, even small details, which typically suffer from partial-volume artifacts, can be imaged adequately. For example, the interface between individual tissues is often spread out and cannot easily be represented as a well-defined surface, so soft tissues are imaged more accurately with volume rendering (see figure 3, below).

Figure 3
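The partial-volume point can be made concrete with a single ray crossing a blurred tissue interface. The sketch contrasts a binary threshold, as a surface extractor would apply, with a linear opacity ramp of the kind a volume-rendering transfer function might use. The sample values and ramp limits are invented for illustration.

```python
def threshold_opacity(v, t):
    """Surface-style binary classification: a voxel is either in or out."""
    return 1.0 if v >= t else 0.0


def ramp_opacity(v, lo, hi):
    """Volume-rendering-style transfer function: opacity rises linearly
    from 0 at `lo` to 1 at `hi`, so partial-volume voxels stay partly visible."""
    if v <= lo:
        return 0.0
    if v >= hi:
        return 1.0
    return (v - lo) / (hi - lo)


# Hypothetical samples along one ray crossing a gradual tissue interface
profile = [10, 30, 50, 70, 90]
binary = [threshold_opacity(v, 50) for v in profile]   # [0.0, 0.0, 1.0, 1.0, 1.0]
graded = [ramp_opacity(v, 10, 90) for v in profile]    # [0.0, 0.25, 0.5, 0.75, 1.0]
```

The threshold forces the gradual interface into a single hard boundary. The ramp keeps the in-between samples as partially transparent material.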

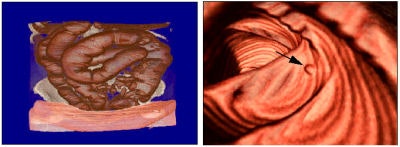

All volume-rendering techniques can be viewed in orthographic (parallel) or perspective mode. Orthographic viewing, which until recently was more popular in 3D clinical applications, casts parallel rays along a chosen viewing direction to render the 3D image from that orientation. Parallel ray casting is mathematically equivalent to placing the "virtual camera" at an infinite distance from the object. Perspective ray casting, in which the rays diverge from the camera, is more computationally expensive but offers a few advantages over parallel ray casting. Figure 4, below, shows an example of perspective rendering and illustrates the ability of the virtual camera to position itself between an obscuring structure and an object of interest. This method allows the observer to advance through the volume and selectively view the embedded pathologies. In some instances it can eliminate the segmentation otherwise needed to remove the obscuring objects.

Figure 4
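The two viewing modes differ in how the rays are generated. In this sketch, which uses hypothetical names and fixes the view direction along +z, orthographic rays all share one direction. Perspective rays all leave a single eye point and diverge. Moving the image plane very far away makes the perspective directions approach the parallel one, which mirrors the infinite-distance equivalence noted above.

```python
import math


def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def parallel_ray(px, py):
    """Orthographic ray for pixel (px, py): every ray points along +z and
    only its origin moves across the image plane z = 0."""
    return (float(px), float(py), 0.0), (0.0, 0.0, 1.0)


def perspective_ray(px, py, eye, focal=1.0):
    """Perspective ray: all rays start at the virtual camera `eye` and
    diverge through pixel (px, py) on an image plane `focal` units ahead."""
    through = (eye[0] + px, eye[1] + py, eye[2] + focal)
    return tuple(eye), normalize(tuple(t - e for t, e in zip(through, eye)))


origin, direction = perspective_ray(1, 0, eye=(0.0, 0.0, 0.0))
# direction is (1/sqrt(2), 0, 1/sqrt(2)): the ray diverges from the axis
```

`eye` can be any point, including one inside the volume between an obscuring structure and a target. That is the property that lets a perspective camera fly through the data.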

Clinical applications of advanced visualization

Some of the applications of 3D rendering in medicine are diagnostics, preoperative planning, intraoperative navigation, postoperative validation, education and training, telemedicine, and telesurgery.

Diagnostics

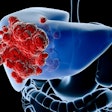

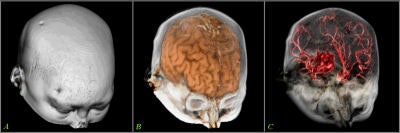

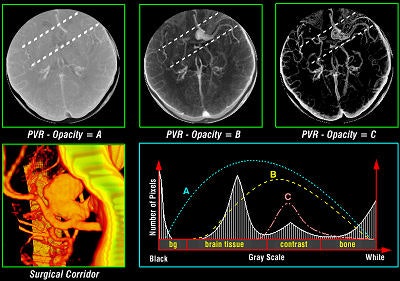

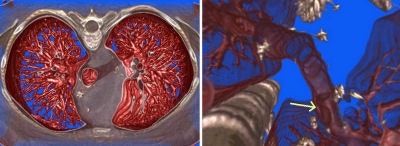

Tomographic examination of pathologies is currently performed by slice-based visual inspection, despite the volumetric nature of the anatomical components, tumors/lesions, and imaging modalities. One reason may be the radiologist's 2D-based training in distinguishing between normal and abnormal tissue. The introduction of 3D imaging and augmented reality has prompted strong interest in the clinical use of such technology. Virtual endoscopic systems based on perspective rendering have been developed to let the physician "fly through" the patient's anatomy. Using such methods, the physician can reduce the opacity of certain tissues (such as the brain) to look at the internal structures (see figure 5, below).

Figure 5

To augment the physician's vision during diagnosis and examination, numerous visualization and fusion algorithms have been developed. However, there appears to be a considerable discrepancy between the information presented in a volumetric image and the information available from clinical analysis of 2D images. These methods also lack standards, from image acquisition to reconstruction. The lack of adequate and reliable segmentation and visualization standards, of statistical analysis, and of the associated training is among the main reasons for the slow progress in the effective use of 3D diagnostics in modern clinical practice.

Preoperative planning

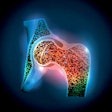

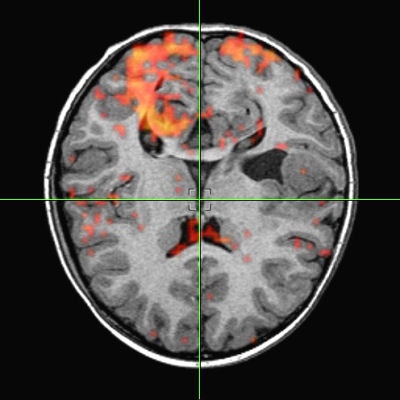

Various volumetric imaging modalities have matured into systems that permit the physician to visualize and quantify the extent of disease in volumetric form to plan therapeutic interventions. 3D imaging techniques greatly enhance a surgeon's ability to create plans prior to surgery, follow preoperative plans during surgery, and modify plans based on intraoperative information (see figure 6, below).

Figure 6

Such techniques reduce invasiveness and the need for exploration during surgery. For some operations, researchers have developed software that aids presurgical planning and simulates proposed treatments based on biological models. For example, orthopedic planning systems use a 3D model of the prosthesis together with the patient's data to determine the optimal placement (location and shape of the cavity) for the prosthesis in the bone. Stent-graft measurements are made before the operation so that the graft can be designed to fit the contours of the vessel.

Path-planning techniques have been applied to neurosurgery to determine the optimal path for a surgical instrument or radiation beam. These methods generally take as input a geometric description of the relevant structures and a cost function associated with damaging each of these structures. They then produce a surgical plan that minimizes the cost function.
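The cost-minimization step can be illustrated with a shortest-path search. The sketch below runs Dijkstra's algorithm on a 2D grid. Entering each cell adds that cell's cost, which is set high for a structure that should not be damaged, and `None` marks a forbidden cell. The grid formulation, the function name, and the cost values are assumptions for illustration only. The planners described above may represent geometry and cost quite differently, for example by scoring straight trajectories.

```python
import heapq


def plan_path(cost, start, goal):
    """Dijkstra's algorithm on a 4-connected 2D grid. Entering cell (r, c)
    adds cost[r][c]; cells set to None are forbidden. Returns (total, path)."""
    rows, cols = len(cost), len(cost[0])
    best = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break
        if d > best[cell]:
            continue                              # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and cost[nr][nc] is not None:
                nd = d + cost[nr][nc]
                if nd < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in best:
        return float("inf"), []                   # goal unreachable
    path = [goal]
    while path[-1] != start:                      # walk predecessors back to start
        path.append(prev[path[-1]])
    return best[goal], path[::-1]


# Hypothetical cost map: the center cell stands for a critical structure
cost = [[1, 1, 1],
        [1, 50, 1],
        [1, 1, 1]]
total, path = plan_path(cost, (0, 0), (2, 2))     # total == 4.0, path avoids (1, 1)
```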

As a complement to path-planning techniques, new developments in registration and real-time sensing are being used to build systems that help surgeons adhere to their preoperative plans during surgery. These systems give the surgeon real-time feedback on how close the current surgical trajectory is to the planned trajectory. Some systems now also allow planning during the surgical procedure itself.
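In its simplest form, the real-time feedback described above comes down to measuring how far the tracked tool tip lies from the planned path. The sketch below assumes a straight entry-to-target trajectory and tracker coordinates that have already been registered to the image. The function name and units (millimeters) are hypothetical.

```python
import math


def deviation_from_plan(tip, entry, target):
    """Distance from the tracked instrument tip to the planned straight
    trajectory entry -> target, plus the fraction of the trajectory
    completed (0 at entry, 1 at target). Inputs are 3D points in mm."""
    d = [t - e for t, e in zip(target, entry)]    # planned direction
    w = [p - e for p, e in zip(tip, entry)]       # tip relative to entry
    s = sum(a * b for a, b in zip(w, d)) / sum(c * c for c in d)
    s = max(0.0, min(1.0, s))                     # clamp to the segment
    closest = [e + s * c for e, c in zip(entry, d)]
    return math.dist(tip, closest), s


offset, progress = deviation_from_plan((3.0, 4.0, 50.0),
                                       (0.0, 0.0, 0.0), (0.0, 0.0, 100.0))
# offset == 5.0 mm off the planned line, progress == 0.5 (halfway to target)
```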

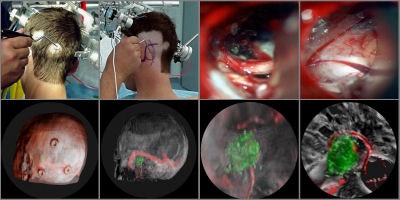

Intraoperative navigation

Intraoperative navigation uses both preoperative images (CT and MR) and intraoperative images (ultrasound and video) to provide localization information during surgery. The main challenge in surgical navigation is registering the preoperative data with the surgical environment, that is, with the patient and the surgical instruments. Until recently, the use of 3D imaging during surgery was limited by a lack of the computing power needed to produce real-time images.

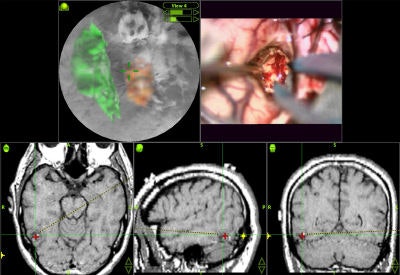

Today, numerous systems augment the surgeon's vision during surgery by providing for the real-time fusion of preoperative volumetric data with intraoperative data, such as video, ultrasound, and images from a surgical microscope and endoscope. Other systems enhance intraoperative visualization by providing feedback regarding the location of the surgical instruments with respect to preoperative data. They use optical or mechanical sensors to localize instruments in the operating room, then map them onto the preoperative images (see figure 7, below).

Figure 7
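A common approach to registration pairs fiducial markers that are visible both in the images and to the tracker, then solves for the rigid transform that aligns them best in a least-squares sense. The sketch below does this in 2D, where the optimal rotation has a closed form. A 3D system would typically solve the same problem with a singular value decomposition. The names and coordinates are hypothetical, and the sketch ignores scaling, tracking noise, and tissue shift.

```python
import math


def register_rigid_2d(src, dst):
    """Least-squares rigid transform (rotation angle, translation) that maps
    paired fiducial points `src` (tracker space) onto `dst` (image space)."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy   # center both sets
        num += px * qy - py * qx          # cross terms: sine component
        den += px * qx + py * qy          # dot terms: cosine component
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    t = (dx - (c * sx - s * sy), dy - (s * sx + c * sy))      # align centroids
    return theta, t


def to_image(theta, t, p):
    """Map a tracked point (e.g. an instrument tip) into image coordinates."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])


# Hypothetical fiducials: image space is tracker space rotated 90 degrees
# and shifted by (10, 5)
tracker = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
image = [(10.0, 5.0), (10.0, 6.0), (9.0, 5.0)]
theta, t = register_rigid_2d(tracker, image)
tip_in_image = to_image(theta, t, (2.0, 3.0))   # approximately (7.0, 7.0)
```

Once the transform has been computed, every tracked instrument position can be mapped onto the preoperative image with it.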

Telesurgical applications underscore the importance of providing a surgeon with tactile and haptic feedback. Tactile sensors placed on surgical instruments can provide feedback to the surgeon via data gloves and virtual surgical tools. Many robotic systems for microsurgery actually augment tactile feedback by scaling sensed forces and resistances up to a level that the surgeon can easily feel.

Postoperative validation

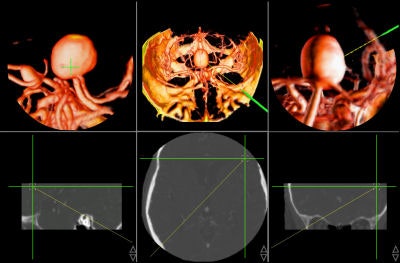

Once the surgery is over, 3D imaging can aid the physician in assessing the success of the procedure. In thoracic aorta studies, volumetric reconstruction provides detailed exploration of the interior of the aorta and its major branches.

Figure 8, below, illustrates a case in which a thoracic aortic aneurysm was repaired with an endoluminal stent graft. The chosen color and opacity scale clearly delineates the stent-graft supports from the aortic wall. This demonstrates that the stent graft is adequately placed and highlights the regions where its metallic struts project into the aortic lumen. In patients who have undergone single or bilateral lung transplantation, volumetric reconstruction of postoperative data can show all bronchial structures and help assess endobronchial stent placement.

Figure 8

Education and training

Tissue models are becoming an attractive alternative to the use of cadavers for understanding anatomical structures and their properties. They are also becoming a vital part of the simulation of certain surgical procedures for the purpose of training.

Biomechanical and physiological models have been proposed to simulate the outcome of various procedures, especially in orthopedics, plastic surgery, and obstetric delivery. For surgical training applications, it is desirable to model tissue deformation from the material properties of the tissues involved, so that the simulation can predict how tissues will move in response to the surgeon's actions.
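A mass-spring model is one simple way to make tissue deform according to its material properties. The sketch below models a 1D chain of point masses joined by springs. The chain is fixed at one end, pushed at the other, and integrated with semi-implicit Euler steps. The stiffness, damping, mass, and time-step values are hypothetical. Practical simulators often use 3D finite-element models instead.

```python
def simulate_chain(n_nodes, k, damping, mass, force, dt=0.01, steps=5000):
    """1D mass-spring chain tracked as displacements from rest. Node 0 is
    fixed (e.g. attached to bone); a constant `force` pushes the last node.
    Uses semi-implicit Euler: update velocity first, then position."""
    u = [0.0] * n_nodes                  # displacement of each node
    v = [0.0] * n_nodes                  # velocity of each node
    for _ in range(steps):
        f = [0.0] * n_nodes
        for i in range(n_nodes - 1):     # Hooke's law for each spring
            stretch = u[i + 1] - u[i]
            f[i] += k * stretch
            f[i + 1] -= k * stretch
        f[-1] += force                   # external load, e.g. an instrument
        for i in range(1, n_nodes):      # node 0 stays fixed
            f[i] -= damping * v[i]       # viscous damping
            v[i] += dt * f[i] / mass
            u[i] += dt * v[i]
    return u


u = simulate_chain(4, k=100.0, damping=5.0, mass=1.0, force=1.0)
# At equilibrium each spring stretches by force / k = 0.01, so u settles
# near [0.0, 0.01, 0.02, 0.03]: the loaded end moves the most.
```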

In neuroendoscopic surgical training, deformable models can help predict how certain tissues deform when an endoscope makes contact during an incision. For example, training a resident for a third ventriculostomy involves passing an endoscope through a frontal burr hole and puncturing the tissue surface. The surface must therefore deform with appropriate resistance, rendered through the force-feedback device. The endoscope is then advanced, and the operator must rely on the feel of traveling down into the third ventricle to locate the appropriate place to puncture. This again requires appropriate tactile feedback, this time mimicking the movement of the endoscope's tip against the ventricle walls. Finally, the floor of the third ventricle is deformed and then punctured with a "pop," and the user sees pulsating cerebrospinal fluid pouring out.
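In a very crude form, the puncture "pop" in this scenario can be modeled as a membrane that pushes back like a spring until the force reaches a rupture threshold, after which the resistance drops to zero. The sketch below assumes that model, with hypothetical stiffness and threshold values and hypothetical names. A real simulator would also need friction, damping, and tissue-specific force curves.

```python
def membrane_force(depth, stiffness, rupture_force, punctured):
    """Force sent to the haptic device when the endoscope tip is `depth`
    (hypothetical mm) past the membrane surface. Returns (force, punctured)."""
    if punctured or depth <= 0.0:
        return 0.0, punctured            # no contact yet, or already through
    force = stiffness * depth            # spring-like resistance while indenting
    if force >= rupture_force:
        return 0.0, True                 # the "pop": resistance vanishes
    return force, False


punctured = False
forces = []
for depth in [0.0, 1.0, 2.0, 3.0, 4.0]:  # tip advancing in 1 mm steps
    f, punctured = membrane_force(depth, stiffness=0.5, rupture_force=1.5,
                                  punctured=punctured)
    forces.append(f)
# Force rises 0.0 -> 0.5 -> 1.0, then drops to 0.0 once the membrane gives way
```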

Irreversible evolution for advanced visualization

A great deal of progress and acceptance has taken place in 3D medical imaging, especially in the past three years. However, fully effective use of multidimensional imaging in modern clinical practice has yet to come.

The availability of computer-assisted tools at this time has significantly contributed to a general strategy for achieving a safer and less invasive treatment of pathologies. The design and development of future tools have to be based strictly upon a critical assessment of the strengths and weaknesses of current-generation tools, interdisciplinary clinical requirements, the realization of prototypes, the definition of test beds, and validation via field trials. Undoubtedly, there is an irreversible evolution toward the use of 3D imaging in all its facets, but some of the most challenging tasks still lie ahead.

By Ramin Shahidi, Ph.D.

AuntMinnie.com contributing writer

October 12, 2006

Reprinted from Maximum Vision magazine by permission of Emageon. Ramin Shahidi, Ph.D., is the director of Image Guidance Laboratories (IGL) and a faculty member in the department of surgery at Stanford University in Stanford, CA. Shahidi has authored more than 50 scientific publications in the field of medical imaging, currently serves as a deputy editor for the Journal of Computer Assisted Radiology and Surgery (JCARS), and is on the editorial board of the Journal of Computer Aided Surgery (JCAS).


Copyright © 2006 Emageon