I often wonder whether others weigh the practical considerations we discussed in parts 1 and 2 of this series, namely paying for AI and the variables that drive ROI, when they ponder adopting artificial intelligence (AI) technology in radiology. Based on the email responses I've received to my articles, the answer is yes.

The most eye-opening feedback relates to having AI reports attached to radiologist reports. Trusting in technology is one thing, but few, if any, radiologists I know will include anything in a report without reviewing it first. This includes voice dictation and will, of course, include an AI-generated report.

In fact, more than half of the radiologists I've talked to even review pre-approved (canned) reports to make sure that something didn't get added or lost in translation. AI would be no exception.

Michael J. Cannavo.

Can AI provide better patient care? Absolutely. Improved accuracy? Possibly, but at what cost? Will it impact radiologist productivity? Certainly. Report turnaround time? Indeed.

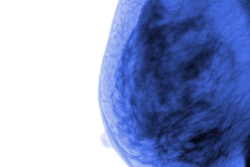

Some studies have shown that using AI will improve diagnostic accuracy with mammography by showing fewer false positives. That's great, but the $38 reimbursement for the professional component already includes the use of computer-aided detection (CAD) software. This means that a delineation of AI versus CAD must be made -- unless the U.S. Centers for Medicare and Medicaid Services (CMS) will accept AI as CAD, which hasn't yet been indicated.

Radiology's experience with digital breast tomosynthesis (DBT) also offers a relevant lesson to consider when projecting the financial impact of AI. Although adding tomosynthesis into the mix nearly doubles the professional reimbursement fee, the additional data it provides also tends to slow down the reading. This basically makes it a financial wash.
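The "financial wash" reasoning can be sketched as simple arithmetic: revenue per reading hour is the fee per exam multiplied by the number of exams read in that hour. In the sketch below, only the $38 fee comes from this article; the doubled fee of $76 and both reading times are hypothetical values chosen purely to illustrate the point, not measured figures.

```python
def revenue_per_hour(fee_per_read: float, minutes_per_read: float) -> float:
    """Professional revenue earned in one hour of reading at a steady pace."""
    if minutes_per_read <= 0:
        raise ValueError("minutes_per_read must be positive")
    reads_per_hour = 60.0 / minutes_per_read
    return fee_per_read * reads_per_hour


# $38 is the fee quoted in the article. The doubled fee and both
# reading times are hypothetical, for illustration only.
baseline = revenue_per_hour(fee_per_read=38.0, minutes_per_read=2.0)
with_dbt = revenue_per_hour(fee_per_read=76.0, minutes_per_read=4.0)

print(f"Baseline: ${baseline:,.2f}/hour, with DBT: ${with_dbt:,.2f}/hour")
```

If the fee doubles but each read takes twice as long, hourly revenue is unchanged. The same calculation applies to AI: any added review time has to be offset by reimbursement for the economics to hold.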

Will imaging practices bring in more exams by marketing the use of AI? It's possible, but take mammography as an example: I haven't yet seen any studies showing that women gravitate toward a facility because it promotes better technology than another. According to my discussions with radiologists, most women choose a facility based on the personality of the mammographers rather than the machines they use.

Workflow changes

Reviewing an AI-generated report, even in a cursory fashion, takes time. And how and when these reports are read changes the radiologist's workflow. Hopefully this will be factored in when the Current Procedural Terminology (CPT) codes for AI finally get published.

Interestingly, most of the preliminary discussions around creating CPT codes for AI have assumed that the radiologist has little, if any, involvement in an AI-generated study. Nothing could be further from the truth.

If AI were the only interpretation method used to produce the final report, with no involvement at all by a radiologist, I would tend to agree. However, as long as radiologists are required to sign the final radiology report and have to read the study, their involvement will obviously be significant.

It's also important to note that this issue really only applies in the U.S. and may not hold true around the world. Countries with socialized medicine may be much faster adopters of AI technology, especially as it relates to certain applications such as chest films and mammography.

This brings up the question of when and how AI is used, because workflow is everything in radiology. To optimize the radiologist's time, the AI report should be available before the radiologist reads the study. Yes, it's one more item for the radiologist to review. But it beats dictating a study without the benefit of seeing what AI said, reading the AI findings afterward, and then having to go back and amend the report. This holds true whether AI finds something the radiologist hadn't commented on or fails to find something the radiologist did. Prefetching protocols must be established to ensure that this workflow is optimized.

If AI is to be used at all, it also must be utilized universally -- not selectively. If one CT brain bleed, one liver and lung cancer assessment, one stroke or other diagnosis is read with the help of AI, then all must be done that way. No picking and choosing is allowed based on the ability to pay or amount paid, if any.

AI processing

Where AI data processing is done is also a major consideration. The most logical place from a technical standpoint is in the cloud; from there, the report can be sent to the patient's folder to be reviewed along with the current study. This also requires some mechanism to be in place that ensures the radiologist has reviewed the AI report, lest it be inadvertently overlooked.

Many AI vendors have suggested that AI processing be performed on-site rather than in the cloud. This can certainly be done, but it requires a dedicated image processor. In most cases, it cannot be done on the existing PACS server. Most PACS servers in operation can barely run the PACS itself efficiently, let alone handle a computationally intense application such as AI. Many sites are additionally dealing with the issue of upgrading to Windows 10, as support for Windows 7 ends early next year. This further reinforces the need for handling image processing either off-site or on a dedicated processor.

It's crucial that image processing is performed before the study is selected for viewing. Having to select AI from the radiologist's worklist adds yet another click to an already click-intensive reading process. The time it takes for AI to process the study, send it back, and display the AI-generated report with the images varies widely from vendor to vendor. Yet the commonality with all is that AI adds time, and time is money.

Ideally, AI should be seamlessly integrated into PACS and the electronic health record (EHR) for it to be of greatest value. This requires not just an interface to the AI software but also some custom coding and modifications to the PACS and EHR software. If a certain study is ordered that has AI associated with it, the AI should work in the background. The entire study should not be presented to the radiologist for review until all components, including the AI findings, are available.
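The gating rule described above can be sketched in a few lines. This is a minimal illustration, not any vendor's PACS or EHR interface: the `Study` record, its field names, and the `ready_for_worklist` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical record of what has arrived for one ordered exam."""
    accession: str
    images_received: bool = False
    ai_required: bool = False          # the order has AI associated with it
    ai_result_received: bool = False   # the AI findings are back


def ready_for_worklist(study: Study) -> bool:
    """Present a study only when every required component is available."""
    if not study.images_received:
        return False
    if study.ai_required and not study.ai_result_received:
        return False
    return True


def build_worklist(studies: list[Study]) -> list[str]:
    """Return accession numbers of studies that are ready to be read."""
    return [s.accession for s in studies if ready_for_worklist(s)]


studies = [
    Study("A1", images_received=True),                        # no AI ordered
    Study("A2", images_received=True, ai_required=True),      # AI still pending
    Study("A3", images_received=True, ai_required=True,
          ai_result_received=True),                           # complete
]
print(build_worklist(studies))
```

With this rule the AI runs in the background and the radiologist never has to select it from the worklist; a study with AI pending simply does not appear until its findings arrive.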

Seamless integration is a noble goal, but realistically it can take up to two years for any vendor to develop the interface. That clock starts only after the interface makes it onto the engineering development project list, and it assumes that nothing else critical takes precedence. By the time the interface is ready, there will also probably have been at least five other AI algorithms developed for that specific body part, which can and should be considered. Keep in mind that you can't just remove one task from the product development project plan and replace it with another. The new task must first go through the evaluation cycle.

The best AI algorithm?

This leads us to another consideration: how to determine the best AI algorithm. Nearly every day there's a press release published about a new AI algorithm. If you believe the release, everything that came before it is trash, and everything that comes after it can't hold a candle to what has been developed.

This leads to two other questions. First, why make the investment in a particular AI algorithm when a better, faster, cheaper algorithm might be right around the corner? And second, who determines what AI algorithm is best?

With the possible exception of academic facilities, few groups have the time or inclination to review every AI algorithm that has been published. A PACS decision is, at best, made every five to seven years. The AI market, in contrast, can easily be reviewed quarterly, and you can still miss some of the available options. This leads to the question of what criteria should be used in selecting an AI algorithm.

Clinical study results? One recently published study found that nearly 95% of all AI studies were not validated using images that were different from those on which the algorithms were trained. Even worse, none of these studies met the recommended criteria for clinical validation of AI in real-world practice. Reports like this don't exactly instill confidence in AI.

Clinical study sample size? Sample sizes vary widely, from fewer than 1,000 imaging exams to close to 1 million. Is bigger (or more) better for training and validating algorithms? The jury is still out on that. Although you can add another decimal point on clinical confidence, you have to ask if it's worth it.

Cost? This also varies widely and needs to be considered from a total-cost-of-ownership perspective rather than just a per-click perspective. The total cost of ownership includes integration costs, initial or yearly licensing fees (if/as applicable), support costs, etc.
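To make the difference between the two perspectives concrete, here is a minimal sketch of a total-cost-of-ownership calculation using the cost categories listed above. The function name, the parameters, and every dollar figure and volume in the example are hypothetical placeholders, not vendor pricing.

```python
def total_cost_of_ownership(
    integration_cost: float,
    annual_license_fee: float,
    annual_support_cost: float,
    per_study_fee: float,
    studies_per_year: int,
    years: int,
) -> float:
    """One-time integration cost plus recurring costs over the ownership period."""
    recurring_per_year = (
        annual_license_fee
        + annual_support_cost
        + per_study_fee * studies_per_year
    )
    return integration_cost + years * recurring_per_year


# All figures below are hypothetical and for illustration only.
per_click_only = 1.00 * 10_000 * 3   # per-study fee x yearly volume x years
tco = total_cost_of_ownership(
    integration_cost=50_000,
    annual_license_fee=20_000,
    annual_support_cost=5_000,
    per_study_fee=1.00,
    studies_per_year=10_000,
    years=3,
)
print(f"Per-click view: ${per_click_only:,.0f}  Total cost of ownership: ${tco:,.0f}")
```

With these made-up inputs, the per-click view captures only a fraction of the full cost, which is why comparing algorithms on per-study price alone can be misleading.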

Vendor experience? Nearly every vendor in the AI marketplace has been in business for less than five years. As the PACS market has shown, even long-time players with deep pockets aren't considered a sure thing, so any investment you make is, at best, a short-term one.

Part 4 in this series will address what the market needs to do to be viable, how and when to start with AI, and what's next.

Michael J. Cannavo is known industry-wide as the PACSman. After several decades as an independent PACS consultant, he worked as both a strategic accounts manager and solutions architect with two major PACS vendors. He has now made it back safely from the dark side and is sharing his observations.

His healthcare consulting services for end users include PACS optimization services, system upgrade and proposal reviews, contract reviews, and other areas. The PACSman is also working with imaging and IT vendors developing market-focused messaging as well as sales training programs. He can be reached at [email protected] or by phone at 407-359-0191.

The comments and observations expressed are those of the author and do not necessarily reflect the opinions of AuntMinnie.com.