An AI chest x-ray "foundation model" developed by Google researchers has demonstrated racial and sex-related bias leading to uneven performance, according to a study published September 27 in Radiology: Artificial Intelligence.

Understanding biases in these new types of AI models is essential for their safe and ethical use in health care, the authors suggested.

"We advocate for comprehensive bias analysis and subgroup performance analysis to become integral parts in the development and auditing of future foundation models," wrote lead author Ben Glocker, PhD, of Imperial College London in the U.K., and colleagues.

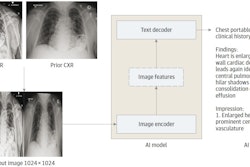

The term foundation model is now widely used to describe pretrained, versatile deep-learning models that can be adapted to a wide range of downstream prediction tasks, the authors explained. Yet despite their increasing popularity, little is known about potential biases encoded and reinforced in these models, they noted.

To that end, the researchers analyzed a chest x-ray foundation model that was proposed in an article published July 19, 2022, in Radiology and is based on more than 800,000 chest x-rays from India and the U.S.

The researchers compared the performance of the proprietary foundation model (SupCon, Google Health) with that of a reference model they built, evaluating 127,118 chest x-rays with associated diagnostic labels. The group also inspected the features generated by the proprietary model for biases that could lead to disparate performance across patient subgroups.

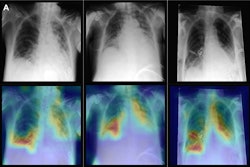

According to the analysis, significant differences were found between male and female patients and between Asian and Black patients in the features related to disease detection.

Specifically, compared with the reference model performance across all subgroups, the foundation model's classification performance on the "no finding" label dropped between 6.8% and 7.8% for female patients, and performance in detecting "pleural effusion" – a buildup of fluid around the lungs – dropped between 10.7% and 11.6% for Black patients, the researchers reported.
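
For readers who want a concrete picture of what a subgroup performance comparison can look like, the following is a minimal sketch and not the authors' method. This article does not state which performance metric the study used, so sensitivity (true positive rate) is assumed here purely for illustration. The function names and the toy data are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_subgroup(y_true, y_pred, groups):
    """Return {subgroup: true positive rate} for binary labels and predictions."""
    tp = defaultdict(int)   # true positives per subgroup
    pos = defaultdict(int)  # positive cases per subgroup
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            pos[group] += 1
            if pred == 1:
                tp[group] += 1
    # Only subgroups with at least one positive case appear in the result.
    return {g: tp[g] / pos[g] for g in pos}


def subgroup_gaps(y_true, pred_a, pred_b, groups):
    """Per-subgroup sensitivity of model A minus model B, in percentage points."""
    sens_a = sensitivity_by_subgroup(y_true, pred_a, groups)
    sens_b = sensitivity_by_subgroup(y_true, pred_b, groups)
    return {g: 100.0 * (sens_a[g] - sens_b[g]) for g in sens_a}


# Toy data invented for illustration only; not taken from the study.
y_true = [1, 1, 1, 1, 1, 1, 1, 1]
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
foundation = [1, 1, 0, 0, 1, 1, 1, 0]  # hypothetical foundation-model predictions
reference = [1, 1, 1, 0, 1, 1, 1, 0]   # hypothetical reference-model predictions

gaps = subgroup_gaps(y_true, foundation, reference, groups)
print(gaps)
```

A negative gap for a subgroup means the first model detects fewer true cases in that subgroup than the second model does. A real audit would also need confidence intervals and a metric appropriate to the clinical task.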

"The fact that the investigated [chest x-ray] foundation model encodes protected characteristics more strongly than a task-specific backbone raises concerns, as these biases could amplify already existing health disparities," the group wrote.

Moreover, dataset size alone does not guarantee a better or fairer model, and foundation models should be fully accessible and transparent, Glocker added in a news release from RSNA.

"As we collect the next dataset, we need to, from day one, make sure AI is being used in a way that will benefit everyone," he concluded.
