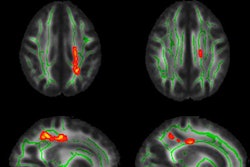

A deep-learning algorithm that analyzed FDG-PET scans was able to differentiate between normal cognition and dementia. Such models could someday help radiologists diagnose Alzheimer's disease, especially radiologists who don't see the condition often, according to a study presented at the RSNA 2017 meeting.

Researchers from the University of California, San Francisco (UCSF) are exploring whether deep-learning models can help radiologists better determine whether a patient has clinically significant cognitive impairment. Although some shortcomings remain, notably in identifying mild cognitive impairment, the technique has worked well in cases of Alzheimer's disease.

"Deep learning has the potential to improve accuracy of diagnosis among patients presenting with symptoms of dementia, which could influence treatment decisions affecting this population," said lead author Dr. Hari Trivedi, a fellow in the department of radiology at UCSF.

Alzheimer's epidemic

Some 5.5 million individuals in the U.S. have Alzheimer's disease. Estimates from several studies predict that this number could triple by 2050, creating an even heavier social, economic, and healthcare burden for the country.

"The diagnosis [for Alzheimer's] can be challenging. It covers a spectrum from normal to mild cognitive impairment and, finally, to Alzheimer's disease," Trivedi told RSNA attendees. "Patients progress at different rates, and the diagnosis is done not only on imaging features but on a full clinical diagnosis, taking into account several patient factors."

Dr. Hari Trivedi from UCSF.

One of the challenges is that the imaging diagnosis in and of itself can be difficult depending on the expertise of the radiologist. Even at UCSF, there can be a "high level of variability in the interpretation skills" of radiologists because the institution sees a small number of Alzheimer's cases, Trivedi said.

"Therefore, we feel that deep-learning models can aid in radiological diagnoses and perhaps even supersede some of these semiquantitative methods that are used now," he added.

His group's objective was to create a deep-learning neural network that is capable of identifying patients with mild cognitive impairment or Alzheimer's disease based on the imaging appearance on FDG-PET and MRI. The model was based on Google's Inception V3 network architecture.

To create and test their deep-learning technique, the researchers started with PET brain scans of 982 patients from the Alzheimer's Disease Neuroimaging Initiative (ADNI). They also retrieved the same type of data for 110 patients from UCSF's PACS, spanning the past 10 to 15 years.

Many of the UCSF cases overlapped with the ADNI data; those subjects were excluded, leaving 42 UCSF patients in the current study: eight with Alzheimer's disease, seven with mild cognitive impairment, and 27 with normal cognition.
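Excluding the overlapping subjects is, in effect, a set difference on subject identity, and the reported class counts can be checked against the cohort total. The minimal sketch below assumes each subject carries a shared identifier (the article does not say how overlap was detected) and uses made-up IDs; `exclude_overlap` is a hypothetical helper. If every UCSF patient who was not retained was dropped for overlap, 68 of the 110 were excluded.

```python
from collections import Counter

def exclude_overlap(local_ids, external_ids):
    """Keep local subjects whose IDs do not appear in the external cohort.

    Hypothetical helper: matching on a shared subject ID is an assumption;
    the article does not describe how overlapping subjects were found.
    """
    external = set(external_ids)
    return [sid for sid in local_ids if sid not in external]

# Class counts reported for the UCSF cohort after exclusion.
ucsf_labels = ["AD"] * 8 + ["MCI"] * 7 + ["NC"] * 27
counts = Counter(ucsf_labels)

collected = 110                            # UCSF patients initially collected
kept = sum(counts.values())                # 8 + 7 + 27 = 42
excluded = collected - kept                # 68, if all exclusions were overlap

# Toy illustration with made-up IDs: "u2" is also in the external cohort.
retained = exclude_overlap(["u1", "u2", "u3"], ["a9", "u2"])
print(kept, excluded, retained)            # 42 68 ['u1', 'u3']
```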

The model was trained using 90% of the collected ADNI data (approximately 900 cases) and then tested using the remaining 10% (approximately 100 cases). The model was also tested on an independent validation set using 42 cases from UCSF.
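A 90/10 hold-out split like the one described can be sketched as a seeded shuffle followed by a cut. This sketch assumes the split was made at the patient level and uses placeholder IDs; the article does not say whether patients with several scans were kept on one side of the split, or which seed or stratification was used. For 982 patients, a 10% hold-out gives 884 training and 98 test patients, consistent with the article's approximate figures.

```python
import random

def split_train_test(patient_ids, test_fraction=0.10, seed=0):
    """Shuffle unique patient IDs and hold out a fraction for testing.

    Splitting by patient (rather than by scan) keeps all scans of one person
    on the same side; whether the study did this is an assumption.
    """
    ids = sorted(set(patient_ids))         # deduplicate; sort for reproducibility
    rng = random.Random(seed)
    rng.shuffle(ids)
    n_test = round(len(ids) * test_fraction)
    return ids[n_test:], ids[:n_test]      # (train, test)

adni_patients = [f"adni_{i:04d}" for i in range(982)]   # placeholder IDs
train_ids, test_ids = split_train_test(adni_patients)
print(len(train_ids), len(test_ids))        # 884 98
```

Seeding the shuffle makes the split reproducible; stratifying by diagnosis would be a natural refinement, but the article does not describe one.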

Preprocessing was performed over the entire volume of 2-mm isotropic MRI voxels, which was cropped to a 20 x 20 x 18-cm region centered on the brain. Sixteen evenly spaced slices were taken from the volume and placed into a 4 x 4 grid. The resulting map focused on the inferior frontal lobes and the temporal and occipital lobes, where atrophy is known to accompany dementia.
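The slice-mosaic step can be sketched in plain Python. At 2-mm voxels, a 20 x 20 x 18-cm crop is 100 x 100 x 90 voxels, and a 4 x 4 grid of 100 x 100 slices yields a 400 x 400 image that a 2D network can take as input. This sketch assumes the 18-cm extent is the slice axis, a volume stored as nested lists indexed `[z][y][x]`, and slice indices spread from the first slice to the last; the article does not specify these details, and the helper names are hypothetical.

```python
def crop_shape(extent_cm, voxel_mm=2.0):
    """Voxel count along each axis for a crop of the given size in cm."""
    return tuple(round(e * 10 / voxel_mm) for e in extent_cm)

def evenly_spaced_indices(n_slices, k):
    """k slice indices spread evenly from the first slice to the last."""
    if k == 1:
        return [n_slices // 2]
    return [round(i * (n_slices - 1) / (k - 1)) for i in range(k)]

def make_mosaic(volume, grid=4):
    """Tile grid*grid evenly spaced slices of volume[z][y][x] into a 2D image.

    Tiles are filled row by row, left to right, top to bottom.
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    picks = evenly_spaced_indices(depth, grid * grid)
    mosaic = [[0.0] * (grid * width) for _ in range(grid * height)]
    for tile, z in enumerate(picks):
        row0 = (tile // grid) * height
        col0 = (tile % grid) * width
        for y in range(height):
            mosaic[row0 + y][col0:col0 + width] = volume[z][y]
    return mosaic

nx, ny, nz = crop_shape((20, 20, 18))       # (100, 100, 90) voxels at 2 mm
# Synthetic volume in which every voxel holds its own slice index.
volume = [[[float(z)] * nx for _ in range(ny)] for z in range(nz)]
mosaic = make_mosaic(volume)
print(len(mosaic), len(mosaic[0]))          # 400 400
```

Tiling lets a pretrained 2D model such as Inception V3 see slices from across the whole volume without the typically heavier memory and data demands of 3D convolutions, at the cost of discarding the slices between the sampled ones.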

"This is a poor man's 3D network, which takes the entire volume and maps it onto a 2D projection, rather than building a 3D convolution neural network, which has its own challenges," Trivedi said.

Finally, the researchers performed a receiver operating characteristic (ROC) analysis to evaluate how well the deep-learning model could distinguish between patients with normal cognition, mild cognitive impairment, and Alzheimer's disease.
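Each pairwise ROC comparison reduces to a two-class problem: keep only the two groups being compared, treat one as positive, and score each case with the model's output for that class. The area under the ROC curve (AUC) then equals the Mann-Whitney probability that a randomly chosen positive case outscores a randomly chosen negative one. The sketch below assumes such per-case scores are available; the scores in the example are made up.

```python
def roc_auc(pos_scores, neg_scores):
    """Area under the ROC curve via the Mann-Whitney U statistic.

    Returns the probability that a randomly chosen positive case scores higher
    than a randomly chosen negative case, counting ties as one half.
    """
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

# Made-up scores for a "normal vs. Alzheimer's" comparison:
# P(Alzheimer's) for three Alzheimer's cases and three normal cases.
ad_scores = [0.9, 0.8, 0.4]
normal_scores = [0.3, 0.5, 0.1]
print(round(roc_auc(ad_scores, normal_scores), 3))  # 0.889
```

An AUC of 0.5 corresponds to chance and 1.0 to perfect separation. The double loop is O(n·m), which is fine for cohorts of this size; a rank-based formula scales better.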

Deep-learning diagnoses

Based on the ADNI test, the deep-learning model achieved its highest marks in differentiating between patients with normal cognition and those with Alzheimer's disease. The model also performed well in separating patients with mild cognitive impairment from those with Alzheimer's. It fell short, however, in distinguishing between normal cognition and mild cognitive impairment.

Deep learning's ability to differentiate dementia types

| ADNI test | Area under the ROC curve |
| --- | --- |
| Normal vs. Alzheimer's | 0.90 |
| Mild cognitive impairment vs. Alzheimer's | 0.84 |
| Normal vs. mild cognitive impairment | 0.69 |

"The tricky one clinically, obviously, is normal cognition versus mild cognitive impairment, which is a much more subtle difference, at only 0.69," Trivedi said.

On the institutional UCSF dataset, the model was excellent at detecting Alzheimer's disease and performed well at identifying normal cognition. However, it performed poorly in identifying mild cognitive impairment, barely better than chance. Trivedi suggested this could be due to the small sample size: only seven UCSF patients had mild cognitive impairment.

Deep learning's ability to diagnose Alzheimer's

| UCSF test | Area under the ROC curve |
| --- | --- |
| Alzheimer's disease | 0.98 |
| Normal cognition | 0.84 |
| Mild cognitive impairment | 0.52 |
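Because the UCSF results list one value per class, they likely reflect a one-vs-rest evaluation (each class against the other two), though the article does not say so. The sketch below computes one-vs-rest AUCs from per-class probabilities and adds a percentile bootstrap interval, a common way (not one the article attributes to the researchers) to gauge how much an AUC estimated from only seven positive cases can vary. The class counts match the UCSF cohort, but the probabilities are random placeholders, not study data, so the printed values carry no clinical meaning.

```python
import random

CLASSES = ("AD", "MCI", "NC")   # Alzheimer's, mild cognitive impairment, normal

def roc_auc(pos, neg):
    """Mann-Whitney estimate of ROC AUC; ties count as one half."""
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def one_vs_rest_auc(labels, probs, target):
    """AUC of `target` versus all other classes, scored by P(target)."""
    k = CLASSES.index(target)
    pos = [p[k] for y, p in zip(labels, probs) if y == target]
    neg = [p[k] for y, p in zip(labels, probs) if y != target]
    return roc_auc(pos, neg)

def bootstrap_ci(labels, probs, target, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the one-vs-rest AUC.

    Cases are resampled with replacement; resamples with no positive or no
    negative case are skipped because AUC is undefined for them.
    """
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if target not in ys or all(y == target for y in ys):
            continue
        stats.append(one_vs_rest_auc(ys, [probs[i] for i in idx], target))
    stats.sort()
    lo = stats[int(alpha / 2 * len(stats))]
    hi = stats[int((1 - alpha / 2) * len(stats)) - 1]
    return lo, hi

# UCSF class counts from the article; probabilities are random placeholders.
rng = random.Random(1)
labels = ["AD"] * 8 + ["MCI"] * 7 + ["NC"] * 27
probs = []
for _ in labels:
    raw = [rng.random() for _ in CLASSES]
    probs.append([r / sum(raw) for r in raw])

for c in CLASSES:
    lo, hi = bootstrap_ci(labels, probs, c)
    print(c, round(one_vs_rest_auc(labels, probs, c), 2), (round(lo, 2), round(hi, 2)))
```

With few positive cases, each resample can shift the AUC noticeably, so the interval for the smallest class tends to be wide; that is the kind of uncertainty a small sample introduces.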

Moving forward, Trivedi and colleagues plan to develop a 3D convolutional neural network to improve the model's performance and better distinguish between degrees of dementia.