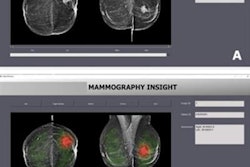

Researchers from Oak Ridge National Laboratory (ORNL) in Oak Ridge, TN, have used artificial intelligence (AI) technology to analyze how radiologists read mammograms, according to research published in the July issue of the Journal of Medical Imaging.

Mammography interpretation is subject to context bias, the influence of a radiologist's previous diagnostic experiences on how subsequent images are read, wrote senior author Georgia Tourassi, PhD, and colleagues. They used an artificial intelligence tool to assess the level of bias in radiologists' mammography interpretations (J Med Imaging, July 2018, Vol. 5:3, 031408).

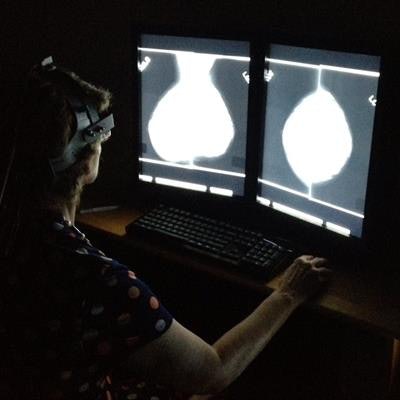

The group tracked the eye movements of three board-certified radiologists and seven radiology residents as they analyzed 100 studies taken from the University of South Florida's Digital Database for Screening Mammography (DDSM). The 400 images (four per study) included a mix of cancerous cases, cancer-free cases, and benign findings that appeared to be cancer.

The readers wore a head-mounted eye-tracking device that recorded their "raw gaze data," or overall visual behavior. Tourassi and colleagues then calculated a measure called fractal dimension on each radiologist's map of eye movements and performed statistical calculations to determine how the eye movements of the participants differed from exam to exam.
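The article does not say how fractal dimension was computed from the gaze maps. A common estimator for a 2-D point set is box counting: overlay grids of decreasing cell size, count the cells that contain at least one point, and take the slope of log(count) against log(cells per side). The sketch below assumes gaze samples are (x, y) points normalized to [0, 1); the function name `box_counting_dimension` and the default grid scales are hypothetical choices for illustration, not the authors' method.

```python
import math
from typing import Iterable, Sequence, Tuple

Point = Tuple[float, float]


def box_counting_dimension(
    points: Sequence[Point],
    scales: Iterable[int] = (2, 4, 8, 16, 32, 64),
) -> float:
    """Estimate the box-counting dimension of a 2-D point set.

    Assumption: coordinates are normalized to [0, 1).
    `scales` gives the number of grid cells per side at each resolution
    (at least two distinct values are required).
    """
    log_scales, log_counts = [], []
    for n in scales:
        # Map each gaze sample to a grid cell of side 1/n; clamp to stay in range.
        occupied = {
            (min(int(x * n), n - 1), min(int(y * n), n - 1)) for x, y in points
        }
        log_scales.append(math.log(n))
        log_counts.append(math.log(len(occupied)))

    # Least-squares slope of log(occupied cells) vs. log(cells per side).
    mean_x = sum(log_scales) / len(log_scales)
    mean_y = sum(log_counts) / len(log_counts)
    num = sum((a - mean_x) * (b - mean_y) for a, b in zip(log_scales, log_counts))
    den = sum((a - mean_x) ** 2 for a in log_scales)
    return num / den
```

As a sanity check, points along a straight line yield a dimension of 1 and points filling the whole image yield 2; under this illustrative measure, a scanpath that sweeps broadly across the image would generally score higher than one that lingers on a few locations.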

The researchers found that the radiologists' interpretations of mammograms were significantly influenced by context bias and that, while radiology trainees were perhaps the most vulnerable to the phenomenon, even more experienced radiologists were affected to some degree.

A radiologist outfitted with a head-mounted eye-tracking device examines a mammogram. Image courtesy of Hong-Jun Yoon/Oak Ridge National Laboratory, U.S. Department of Energy.

"These findings will be critical in the future training of medical professionals to reduce errors in the interpretations of diagnostic imaging and will inform the future of human and computer interactions going forward," Tourassi said in a statement released by ORNL.