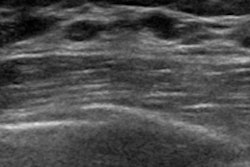

A deep-learning model that uses vision transformers for image classification can automatically classify breast ultrasound images as benign or malignant, according to research published September 2 in Academic Radiology.

George Zhou and Bobak Mosadegh, PhD, both from Weill Cornell Medicine in New York, found that their model, which incorporated both transfer and ensemble learning approaches, achieved high accuracy when tested on both benign and malignant breast findings from ultrasound images.

"Both transfer learning and ensemble learning can each offer increased performance independently and can be sequentially combined to collectively improve the performance of the final model," the two wrote. "Furthermore, a single vision transformer can be trained to match the performance of an ensemble of a set of vision transformers using knowledge distillation."

Breast ultrasound is the go-to supplemental imaging modality for confirming suspicious findings on screening mammography. But the technology tends to flag common, nonpalpable benign lesions, resulting in unnecessary invasive biopsies, according to the authors.

Previous studies suggest that applying deep learning to breast ultrasound can help classify findings more accurately. Zhou and Mosadegh specifically highlighted the potential of the vision transformer to deliver this improvement. The vision transformer is built around a self-attention mechanism, and in breast ultrasound it has shown performance comparable to or better than that of convolutional neural networks (CNNs). The researchers sought to build on this earlier work to further improve breast ultrasound classification performance.

To develop their deep-learning model, Zhou and Mosadegh obtained single-view, B-mode ultrasound images from the publicly available Breast Ultrasound Image (BUSI) dataset. They compared the performance of vision transformers to that of CNNs, followed by a comparison between supervised, self-supervised, and randomly initialized vision transformers.

From there, they created an ensemble of 10 independently trained vision transformers and compared the ensemble's performance to that of each individual transformer. Using knowledge distillation, the team also trained a single vision transformer to emulate the ensemble. The final dataset included 437 benign and 210 malignant images.
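The ensembling and distillation steps can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: it assumes the ensemble averages each member's softmax probabilities (soft voting) and that the student is trained with cross-entropy against those averaged probabilities. The function names, the three-member ensemble, and the logit values are all hypothetical; the study itself used 10 transformers.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities, optionally softened by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def ensemble_probs(member_logits):
    """Average the class probabilities predicted by each ensemble member."""
    member_probs = [softmax(logits) for logits in member_logits]
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    return [sum(p[c] for p in member_probs) / n_members for c in range(n_classes)]

def distillation_loss(student_logits, teacher_probs, temperature=1.0):
    """Cross-entropy between the teacher's soft labels and the student's prediction."""
    student_probs = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs) if t > 0)

# Hypothetical [benign, malignant] logits from three ensemble members for one image.
member_logits = [[2.0, 0.5], [1.2, 0.9], [0.3, 1.1]]
teacher = ensemble_probs(member_logits)  # soft label the student is asked to match

print(teacher)
print(distillation_loss([1.0, 0.4], teacher))
```

Under these assumptions, the loss is smallest when the student's predicted probabilities equal the ensemble's averaged probabilities, which is what lets a single model approximate the ensemble's behavior.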

Using fivefold cross-validation, the researchers found that both their individual and ensemble models outperformed the ResNet-50 CNN, which underwent self-supervised pretraining on ImageNet, in classifying breast ultrasound findings. The ensemble model showed the highest area under the receiver operating characteristic curve (AUROC) and area under the precision-recall curve (AUPRC) values.
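For readers unfamiliar with fivefold cross-validation, the sketch below shows one way to split a dataset with the reported class counts into five held-out folds. It assumes a stratified split, in which each fold keeps roughly the same benign-to-malignant ratio; the article does not say whether the authors stratified, and the function name `stratified_folds` and the random seed are hypothetical.

```python
import random

def stratified_folds(labels, n_folds=5, seed=0):
    """Assign each sample index to a fold, keeping class ratios roughly equal per fold."""
    rng = random.Random(seed)
    folds = [[] for _ in range(n_folds)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        # Deal the shuffled indices of this class round-robin across the folds.
        for position, i in enumerate(idx):
            folds[position % n_folds].append(i)
    return folds

# Class counts reported in the article: 437 benign (0) and 210 malignant (1).
labels = [0] * 437 + [1] * 210
folds = stratified_folds(labels)

for k, test_idx in enumerate(folds):
    held_out = set(test_idx)
    train_idx = [i for i in range(len(labels)) if i not in held_out]
    n_malignant = sum(labels[i] for i in test_idx)
    print(f"fold {k}: train={len(train_idx)} test={len(test_idx)} malignant_in_test={n_malignant}")
```

Each image is held out exactly once, so every prediction used for evaluation comes from a model that did not see that image during training.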

Comparison between breast ultrasound classification models

| Measure | CNN model | Independently trained vision transformers (average) | Distilled vision transformer model | Ensemble model |
|---|---|---|---|---|
| AUROC | 0.84 | 0.958 | 0.972 | 0.977 |
| AUPRC | 0.75 | 0.931 | 0.96 | 0.965 |
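To make the two measures in the table concrete, the following sketch computes them for a toy set of predictions. It assumes AUROC is calculated as the fraction of malignant-benign pairs ranked correctly and that AUPRC is approximated by average precision; the article does not state which AUPRC estimator the authors used, and the labels and scores here are invented for illustration.

```python
def auroc(labels, scores):
    """Probability that a random positive is scored above a random negative (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def average_precision(labels, scores):
    """Mean of the precision values at the rank of each positive sample."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, precisions = 0, []
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions)

# Hypothetical labels (1 = malignant) and predicted malignancy scores.
y_true = [1, 0, 1, 0, 0, 1]
y_score = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]

print(auroc(y_true, y_score))
print(average_precision(y_true, y_score))
```

Both measures equal 1.0 when every malignant image is scored above every benign one. AUPRC is the more sensitive of the two to class imbalance, which is relevant here because benign images outnumber malignant ones roughly two to one.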

The study authors suggested that the high performance of the ensemble model can be attributed to the "multiview" theory of data, where deep-learning models can learn to correctly classify an image based on a subset of multiple views during training. This includes "learning" about different imaging features associated with benign and malignant lesions.

"By ensembling these models, a more comprehensive collection of views is represented, leading to superior test set accuracy," the authors wrote.

They also called for future studies to determine how binary predictions from a deep-learning model can help guide final BI-RADS categorizations, which would be particularly useful for upgrading or downgrading BI-RADS 4 for biopsy recommendations.
