While measuring radiologists' competency can be a beneficial process, the lack of a standardized evaluation system may also make it a flawed one.

At the University of Michigan Health System in Ann Arbor, radiologists in the chest x-ray department are evaluated every two years for competency, including their rate of misdiagnosis. Dr. Philip Cascade shared the results of the department's latest survey at the American Roentgen Ray Society meeting this month.

"In our chest radiography department, we wanted to determine the individual error rate as a means for testing for competency," Cascade said. "Our theory was based on the assumption that a random sampling of misdiagnoses will represent the total number of errors."

Cases from 1994 to 1998 in which readers disagreed on the interpretation were compiled into a database. Both the patients and the doctors were assigned numerical codes to protect their privacy, Cascade said.

The cases were then classified into four groups:

- Class I - a true diagnosis could not be made even retrospectively

- Class II - diagnosis was very difficult to make

- Class III - diagnosis should be obvious most of the time

- Class IV - diagnosis should always be made

As an example of a Class I case, Cascade discussed a patient with a history of recurrent lung cancer.

"On the chest radiograph, it was called a recurrent lung cancer in the mediastinum," he said. "On the CT, it was mediastinal fat. Even in retrospect, you would have still called it recurrent cancer."

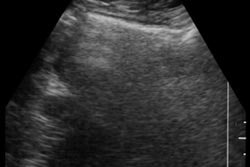

A Class II image was initially read as normal, but on second review an ill-defined, 5-mm nodule camouflaged by a rib could be seen, Cascade said.

The radiologists were then divided into three groups: "majors" in chest radiology, who read between 32,000 and 58,000 x-rays over six years; "minors," who read between 13,000 and 18,500 x-rays; and "non-chest faculty," such as fellows or doctors assigned to the emergency department.

Over the six years, the six chest radiologists read 153,522 studies, compared with 391,317 read by non-chest faculty. A total of 218 disagreements in interpretation were reviewed. There were 162 Class I and Class II misses, 43 Class III misses, and nine Class IV misses. Most of the missed findings were nodules and mediastinal or hilar masses.

Nearly 13% of the misses were false positives, and the majority of those were potential lung cancers, "so maybe that's good," Cascade said. "We're trying to be more sensitive and not as specific."

Non-chest faculty accounted for significantly more Class III misses, and all nine Class IV misses were attributed to this same group, he said.

The advantages of this competency-assessment scheme are that it is continuous, involves a high number of cases and a broad range of diagnoses, and has educational benefits. If any individual radiologist had shown a significant number of misses, that doctor's work would have been reviewed more closely, Cascade said.

However, the study's drawbacks included potential bias from case selection and from clinical factors such as patient history, disease prevalence, and demographics.

"It's possible that one radiologist had more difficult cases with more pathology," Cascade explained. "We have no way to know if that was true."

Cascade suggested that a standardized lexicon and reporting system, similar to BI-RADS for breast screening, be developed so that these biases can be reduced or eliminated.

"At this time, all the methods for assessing diagnostic capability are subject to some bias. What we need is to develop a computer program which would create a quantitative method for discussing competency," he said.

By Shalmali Pal

AuntMinnie.com staff writer

May 24, 2000

Copyright © 2000 AuntMinnie.com