Artificial intelligence (AI) trained to interpret mammograms -- even with larger datasets -- may not be generalizable to diverse patient populations, suggests research published November 21 in JAMA Network Open.

A team led by William Hsu, PhD, from the University of California, Los Angeles (UCLA) found that a previously validated ensemble AI model performed worse than it had in earlier, more homogeneous cohorts, and that it performed worse for subgroups such as Latinx women and women with a personal history of breast cancer.

"This study suggests the need for model transparency and fine-tuning of AI models for specific target populations prior to their clinical adoption," Hsu and co-authors wrote.

While demand for AI in clinical practice is growing, the researchers noted that models may still suffer from underspecification, in which a model that performs well on its original test data does not generalize to new populations. They wrote that testing models on independent populations to identify those at risk of underspecification is a "critical step" in external validation, because it helps establish that a model generalizes before it is clinically adopted.

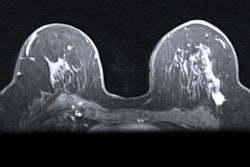

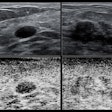

The team sought to assess the performance of a challenge ensemble deep learning method from the Digital Mammography Dialogue on Reverse Engineering Assessment and Methods (DREAM) Challenge. The data consisted of 37,317 examinations from 26,817 women who underwent routine breast screening through the Athena Breast Health Network at UCLA between 2010 and 2020. The group compared the method's performance in diagnosing breast cancer with that of the original radiologist readers and with a combination of the two, assessing how well it performed in a more diverse screening population.

The researchers found that combining the challenge ensemble method with radiologist reading yielded results comparable to those of radiologist reading alone.

Performance comparison between radiologist readers and deep learning algorithms for breast cancer diagnosis

| Measure | Radiologist | Challenge ensemble method | Challenge ensemble method plus radiologist |
|---|---|---|---|
| Sensitivity | 82% | 54% | 81% |
| Specificity | 93% | 69% | 92% |
| Area under the receiver operating characteristic curve (AUC) | -- | 0.85 | 0.93 |
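To make the three measures in the table concrete, here is a minimal Python sketch of how sensitivity, specificity, and AUC are commonly computed. It is not the study's code: the labels and scores are made-up toy values, the function names are hypothetical, and the 0.5 decision threshold is an arbitrary assumption. AUC is computed with its rank-based interpretation, the probability that a randomly chosen cancer case receives a higher score than a randomly chosen non-cancer case.

```python
from typing import Sequence


def sensitivity_specificity(labels: Sequence[int], preds: Sequence[int]) -> tuple[float, float]:
    """Return (sensitivity, specificity); label/pred 1 = cancer, 0 = no cancer."""
    pairs = list(zip(labels, preds))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)  # cancers correctly flagged
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)  # cancers missed
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)  # non-cancers correctly cleared
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)  # false-positive recalls
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability a random positive outscores a random negative (ties = 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 3 cancers, 5 non-cancers, with model scores in [0, 1].
labels = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.3, 0.6, 0.2, 0.1, 0.4, 0.05]
preds = [1 if s >= 0.5 else 0 for s in scores]  # assumed decision threshold of 0.5

sens, spec = sensitivity_specificity(labels, preds)
auc_value = roc_auc(labels, scores)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} AUC={auc_value:.2f}")
# prints: sensitivity=0.67 specificity=0.80 AUC=0.87
```

Sensitivity and specificity depend on where the threshold is set, while AUC summarizes ranking quality across all thresholds. Computing AUC therefore requires a continuous score; a single binary read produces only one sensitivity/specificity pair.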

But the investigators also found that the machine learning models faltered in specific patient subgroups, which suggests that the models need to be trained on more diverse data. For example, the combined method had significantly lower sensitivity and specificity than the radiologist in women with a personal history of breast cancer, and significantly lower specificity than the radiologist in Latinx women.
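Subgroup findings like these come from stratifying the same true/false positive and negative counts by a patient attribute. The Python sketch below assumes hypothetical record fields (`group`, `label`, `pred`) and toy data; the group names are illustrative, not the study's categories. It computes per-group sensitivity and specificity only and does not perform the significance testing needed to call a difference between groups significant.

```python
from collections import defaultdict


def subgroup_metrics(records):
    """Per-group (sensitivity, specificity) from dicts with hypothetical keys
    'group', 'label' (1 = cancer) and 'pred' (1 = flagged by the reader/model)."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for r in records:
        c = counts[r["group"]]
        if r["label"] == 1:
            c["tp" if r["pred"] == 1 else "fn"] += 1
        else:
            c["tn" if r["pred"] == 0 else "fp"] += 1

    results = {}
    for group, c in counts.items():
        n_pos = c["tp"] + c["fn"]
        n_neg = c["tn"] + c["fp"]
        # NaN marks a subgroup with no cancers (or no non-cancers) in the sample.
        sens = c["tp"] / n_pos if n_pos else float("nan")
        spec = c["tn"] / n_neg if n_neg else float("nan")
        results[group] = (sens, spec)
    return results


# Toy records only, not study data.
records = [
    {"group": "prior_history", "label": 1, "pred": 0},
    {"group": "prior_history", "label": 1, "pred": 1},
    {"group": "prior_history", "label": 0, "pred": 1},
    {"group": "prior_history", "label": 0, "pred": 0},
    {"group": "no_history", "label": 1, "pred": 1},
    {"group": "no_history", "label": 0, "pred": 0},
    {"group": "no_history", "label": 0, "pred": 0},
]

for group, (sens, spec) in subgroup_metrics(records).items():
    print(f"{group}: sensitivity={sens:.2f} specificity={spec:.2f}")
```

Because each subgroup holds only a fraction of the cancers, per-group estimates are noisier than the overall figures, so comparisons between groups are usually reported with confidence intervals or formal tests.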

"Our results support the need for increased diversity in training data sets, particularly for women in minority racial and ethnic groups, women with dense breasts, and women who have previously undergone surgical resection," the team concluded.