Artificial intelligence (AI) software can flag suspected intracranial hemorrhage (ICH) cases for radiologists. But do AI algorithms perform as well in the real world as they do in initial testing?

A new study published April 2 in the Journal of the American College of Radiology raises questions about how well results from initial testing can be generalized to actual clinical environments. In a retrospective study that included over 3,600 patients at their institution, researchers from the University of Wisconsin-Madison found that a commercial AI algorithm yielded lower sensitivity and positive predictive value than had been reported in previously published studies.

What's more, radiomics analysis found no link between CT image quality or textural features and the software's decreased diagnostic performance.

"Despite extensive evaluation, we were unable to identify the source of this discrepancy, raising concerns about the generalizability of these tools with indeterminate failure modes," wrote the researchers led by first author Andrew Voter, PhD, and senior author Dr. John-Paul Yu, PhD.

Calculating diagnostic accuracy

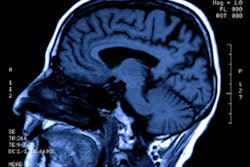

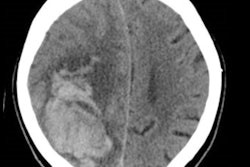

In an effort to assess its diagnostic accuracy at their institution, the researchers retrospectively applied ICH detection software from AI software developer Aidoc to 3,605 consecutive adult patients receiving emergent, noncontrast head CT scans between July 1, 2019, and December 30, 2019. The researchers then compared the software's analysis with the final original interpretation of an attending neuroradiologist with a certificate of added qualification.

Cases that were concordant between the AI and original interpreting neuroradiologist were assumed to be correct for the purposes of the study; discordant results were adjudicated by both a second-year radiology resident and a second neuroradiologist with six years of experience and a certificate of added qualification.

Of the 3,605 cases, 349 (9.7%) had ICH. Overall, the neuroradiologist and AI interpretations were concordant in 96.9% of cases.

The algorithm yielded the following results:

- Sensitivity: 92.3%

- Specificity: 97.7%

- Positive predictive value (PPV): 81.3%

- Negative predictive value (NPV): 99.2%
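
These four figures are consistent with the false-positive and false-negative counts reported later in the article. As a minimal sketch, assuming the 349 ICH-positive cases are the reference-standard positives (so true positives are 349 minus the 27 false negatives, and true negatives are the 3,256 ICH-negative cases minus the 74 false positives), the metrics can be recomputed from a 2x2 confusion matrix. The constant and function names are illustrative, not from the study.

```python
# Recompute the reported accuracy metrics from a 2x2 confusion matrix.
# Assumption: the 349 ICH-positive cases are the reference-standard positives.

TOTAL_CASES = 3605
ICH_POSITIVE = 349
AI_FALSE_POSITIVES = 74
AI_FALSE_NEGATIVES = 27


def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Return sensitivity, specificity, PPV, and NPV as percentages."""
    return {
        "sensitivity": 100 * tp / (tp + fn),  # ICH cases the AI flagged
        "specificity": 100 * tn / (tn + fp),  # ICH-negative cases left unflagged
        "ppv": 100 * tp / (tp + fp),          # AI flags that were true ICH
        "npv": 100 * tn / (tn + fn),          # unflagged cases truly ICH-negative
    }


tp = ICH_POSITIVE - AI_FALSE_NEGATIVES                   # 322 true positives
tn = (TOTAL_CASES - ICH_POSITIVE) - AI_FALSE_POSITIVES   # 3,182 true negatives
metrics = diagnostic_metrics(tp, AI_FALSE_POSITIVES, tn, AI_FALSE_NEGATIVES)

for name, value in metrics.items():
    print(f"{name}: {value:.1f}%")
```

Running it prints 92.3%, 97.7%, 81.3%, and 99.2%, matching the reported values. As a general property, PPV and NPV also depend on how common ICH is in the scanned population (9.7% here), whereas sensitivity and specificity do not.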

Lower sensitivity, PPV

Although the AI's specificity and negative predictive value were in line with previous research results, its sensitivity and PPV were lower in this study, according to the authors.

Most of the incorrect flags by the software were false positives -- 77% of which were due to misidentification of a benign hyperdense imaging finding, according to the authors. Overall, the software had 74 (2.1%) false-positive findings and 27 (0.75%) false-negative results, compared with four (0.1%) false positives and six (0.2%) false negatives by the original neuroradiologist.
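
The percentages above are simple fractions of all 3,605 cases. A short sketch, using only the counts given in the article, recomputes them for both readers and compares total errors. The dictionary and function names are illustrative.

```python
# Compare per-case error rates for the AI and the original neuroradiologist,
# using the false-positive/false-negative counts reported in the study.

TOTAL_CASES = 3605

ERROR_COUNTS = {
    "AI": (74, 27),               # (false positives, false negatives)
    "neuroradiologist": (4, 6),
}


def error_rates(fp: int, fn: int, total: int) -> tuple[float, float, float]:
    """Return FP rate, FN rate, and overall error rate as % of all cases."""
    return 100 * fp / total, 100 * fn / total, 100 * (fp + fn) / total


for reader, (fp, fn) in ERROR_COUNTS.items():
    fp_pct, fn_pct, total_pct = error_rates(fp, fn, TOTAL_CASES)
    print(f"{reader}: FP {fp_pct:.2f}%, FN {fn_pct:.2f}%, all errors {total_pct:.2f}%")

ai_errors = sum(ERROR_COUNTS["AI"])                  # 101
rad_errors = sum(ERROR_COUNTS["neuroradiologist"])   # 10
print(f"AI errors relative to neuroradiologist: {ai_errors / rad_errors:.1f}x")
```

At two decimals the AI's false-positive rate is 2.05%, which the article rounds to 2.1%; in total the AI made 101 errors versus 10 for the original neuroradiologist.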

The researchers then performed a failure-mode analysis to identify factors that could be contributing to the incorrect AI interpretations. In univariable logistic regression, age, gender, prior neurosurgery, the type of hemorrhage, and the number of ICHs were all significantly correlated with decreased diagnostic accuracy. In multivariable logistic regression, all of these factors -- except for gender -- significantly contributed to the algorithm's performance, according to the researchers.
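
The article names the predictors but not how they were coded, so the following is only a sketch of the general univariable-versus-multivariable distinction, run on entirely synthetic, hypothetical data: a standardized age variable, a prior-surgery flag, and an assumed binary outcome of "AI read incorrect." A univariable model fits one predictor at a time; a multivariable model fits them together, so each coefficient is adjusted for the others. Plain gradient descent stands in for a statistics package, and the sketch reports odds ratios without the p-values or confidence intervals a real failure-mode analysis would use.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(rows, labels, lr=1.0, epochs=500):
    """Fit a logistic regression by batch gradient descent on the mean log-loss.

    rows: list of feature lists (no intercept column); labels: list of 0/1.
    Returns [intercept, coef_1, ..., coef_k] on the log-odds scale.
    """
    k = len(rows[0])
    w = [0.0] * (k + 1)
    n = len(labels)
    for _ in range(epochs):
        grad = [0.0] * (k + 1)
        for x, y in zip(rows, labels):
            z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))
            residual = sigmoid(z) - y  # derivative of the log-loss w.r.t. z
            grad[0] += residual
            for j, xj in enumerate(x):
                grad[j + 1] += residual * xj
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w


# Hypothetical synthetic cohort (not study data); outcome 1 = AI read was wrong.
random.seed(0)
cases = []
for _ in range(1000):
    age_z = random.gauss(0.0, 1.0)  # standardized age
    # Prior surgery made more common in older patients, so predictors correlate.
    prior_surgery = 1 if random.random() < (0.3 if age_z > 0 else 0.1) else 0
    true_logit = -1.5 + 0.5 * age_z + 1.0 * prior_surgery  # assumed effects
    ai_error = 1 if random.random() < sigmoid(true_logit) else 0
    cases.append((age_z, prior_surgery, ai_error))

labels = [c[2] for c in cases]

# Univariable: one predictor per model.
uni_age = fit_logistic([[c[0]] for c in cases], labels)
uni_surgery = fit_logistic([[c[1]] for c in cases], labels)

# Multivariable: both predictors in one model, each adjusted for the other.
multi = fit_logistic([[c[0], c[1]] for c in cases], labels)

print("univariable age OR:", round(math.exp(uni_age[1]), 2))
print("univariable prior-surgery OR:", round(math.exp(uni_surgery[1]), 2))
print("multivariable ORs (age, prior surgery):",
      round(math.exp(multi[1]), 2), round(math.exp(multi[2]), 2))
```

In practice, a library such as statsmodels (for example, its `Logit` model) would supply the standard errors and p-values needed to judge which factors are significant.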

Next, the researchers uploaded all cases that were positive for ICH and all cases with discordant results to a server (HealthMyne) for segmentation and computation of CT radiomic and textural features. However, this analysis didn't reveal any systematic differences between concordant and discordant studies, according to the researchers.
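
The article does not list which features HealthMyne computed, so the following is not its implementation. As a rough illustration of one family of radiomic features, a minimal sketch of first-order (histogram-based) statistics computed from voxel intensities in Hounsfield units inside a segmented region; the function name and the 25-HU default bin width are assumptions.

```python
import math
from collections import Counter


def first_order_features(hu_values: list[float], bin_width: float = 25.0) -> dict[str, float]:
    """Compute a few first-order radiomic features for one segmented region.

    hu_values: voxel intensities (HU) inside the segmentation.
    bin_width: histogram bin width in HU used for the entropy estimate.
    """
    n = len(hu_values)
    mean = sum(hu_values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in hu_values) / n)  # population SD
    # Population skewness; set to 0 for a region of uniform intensity.
    skewness = (
        sum((v - mean) ** 3 for v in hu_values) / n / std**3 if std > 0 else 0.0
    )
    # Shannon entropy (bits) of the intensity histogram with fixed-width bins.
    bins = Counter(math.floor(v / bin_width) for v in hu_values)
    entropy = -sum((c / n) * math.log2(c / n) for c in bins.values())
    return {"mean": mean, "std": std, "skewness": skewness, "entropy": entropy}


# Hypothetical region: made-up voxel values (HU), not data from the study.
roi = [55.0, 60.0, 62.0, 58.0, 70.0, 65.0, 61.0, 59.0]
for name, value in first_order_features(roi, bin_width=10.0).items():
    print(f"{name}: {value:.2f}")
```

In a comparison like the one described, such features would be computed per case and then compared between concordant and discordant groups with an appropriate statistical test. Radiomics platforms typically also compute shape and higher-order texture features (for example, gray-level co-occurrence matrix statistics), which this sketch leaves out.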

"Therefore, it seems unlikely that image quality per se is a meaningful failure mode in our data, although it remains to be seen if AI tools are insensitive to image quality as image quality may have different meanings for machine vision applications versus human visual interpretation," the authors wrote.

The results highlight the need for standardized study designs to enable site-to-site comparisons of AI results, according to the researchers.

"Our work suggests that the development of a common set of rigorous study criteria will facilitate collaboration, helping safely grow the role of AI [decision-support systems] in clinical practice," they wrote.