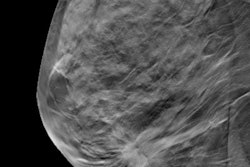

Artificial intelligence (AI) still has a long way to go before it can outperform breast imagers or even be used in clinical settings, according to a literature review published September 1 in the BMJ.

A team led by Dr. Karoline Freeman from the University of Warwick in England reviewed 12 studies published in the last decade. The group found that the evidence for AI was of poor quality and had low applicability to breast cancer screening.

"Evidence is insufficient on the accuracy or clinical effect of introducing AI to examine mammograms anywhere on the screening pathway," Freeman and colleagues wrote.

Interest in AI's potential to help radiologists detect cancers has grown in recent years, with some studies reporting that it outperforms human readers. Other studies have suggested that AI combined with human readers could help detect cancerous lesions more accurately.

However, some researchers have proposed that AI could exacerbate the harms of screening.

"For example, AI might alter the spectrum of disease detected at breast screening if it differentially detects more microcalcifications, which are associated with lower grade ductal carcinoma in situ," they said. "In such a case, AI might increase rates of overdiagnosis and overtreatment and alter the balance of benefits and harms."

They also pointed out that, unlike radiologists, AI algorithms do not understand the context, mode of collection, or meaning of the images being viewed.

The University of Warwick team was commissioned by the UK National Screening Committee to determine whether there is sufficient evidence to use AI in breast screening practice by reviewing studies published from 2010 to 2020.

Three of the studies compared AI systems with the clinical decisions of radiologists. Of the 79,910 women included, 1,878 had screen-detected cancer or interval cancer within 12 months of screening. Of the 36 AI systems evaluated in these studies, 34 were found to be less accurate than a single radiologist. All systems were also found to be less accurate than double reading.
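To put those counts in proportion, the arithmetic can be sketched in a few lines of Python. This is only an illustration using the figures quoted above; the `percent` helper and the variable names are made up for this example and do not come from the review.

```python
# Figures quoted in the article for the three large studies.
women_screened = 79_910
women_with_cancer = 1_878
ai_systems_total = 36
ai_systems_worse_than_single_reader = 34


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Share of included women with screen-detected or interval cancer.
cancer_share = percent(women_with_cancer, women_screened)

# Share of evaluated AI systems that were less accurate than one radiologist.
worse_share = percent(ai_systems_worse_than_single_reader, ai_systems_total)

print(f"Women with cancer: {cancer_share}%")
print(f"AI systems less accurate than a single radiologist: {worse_share}%")
```

In other words, roughly one in 40 of the women included had cancer, and all but two of the AI systems fell short of a single reader.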

More promise was seen in smaller studies. The team looked at five studies involving a total of 1,086 women, which reported that all the AI systems evaluated were more accurate than a single radiologist.

However, the researchers pointed out that these studies were at high risk of bias and that their results were not replicated in larger studies.

In three studies, AI was used as a prescreening tool to triage which mammograms need to be examined by a radiologist and which do not. Across the three studies, AI screened out 53%, 45%, and 50% of women as low risk, respectively, but also screened out 10%, 4%, and 0% of the cancers detected by radiologists.
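The trade-off in those triage figures is easier to see when each study's numbers are flipped around: how much reading work would remain for radiologists, and what share of radiologist-detected cancers would still reach them. The Python sketch below does that subtraction with the percentages quoted above. The `TriageResult` class and its field names are hypothetical, introduced only for this illustration, and the studies are listed simply in the order the figures are given.

```python
from dataclasses import dataclass


@dataclass
class TriageResult:
    """Reported triage percentages for one study (illustrative container)."""

    women_screened_out_pct: int    # women labeled low risk and not sent to a radiologist
    cancers_screened_out_pct: int  # radiologist-detected cancers among those screened out

    @property
    def remaining_workload_pct(self) -> int:
        # Share of mammograms that would still be read by a radiologist.
        return 100 - self.women_screened_out_pct

    @property
    def cancers_retained_pct(self) -> int:
        # Share of radiologist-detected cancers that would still be read.
        return 100 - self.cancers_screened_out_pct


# Percentages as quoted in the article, in the order given.
studies = [TriageResult(53, 10), TriageResult(45, 4), TriageResult(50, 0)]

for number, study in enumerate(studies, start=1):
    print(
        f"Study {number}: {study.remaining_workload_pct}% of reads remain, "
        f"{study.cancers_retained_pct}% of radiologist-detected cancers retained"
    )
```

Seen this way, cutting the reading workload roughly in half came at a cost that ranged from no missed cancers to one in 10 of those radiologists had found.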

The University of Warwick team called for well-designed comparative accuracy studies, randomized controlled trials, and cohort studies in large screening populations.

"Such studies will enable an understanding of potential changes to the performance of breast screening programs with an integrated AI system," they said.

Freeman and colleagues also said that one promising path for AI is to complement rather than compete with radiologists.

"By highlighting the shortcomings, we hope to encourage future users, commissioners, and other decision-makers to press for high-quality evidence on test accuracy when considering the future integration of AI into breast cancer screening programs," they wrote.