CHICAGO -- Raise your awareness of the unseen aspects of radiology AI, advised Woojin Kim, MD, who challenged RSNA 2025 attendees to look under the hood of large language models (LLMs) and rethink how they are benchmarked.

Woojin Kim, MD.

In a keynote session on December 3, Kim discussed three hot topics in radiology AI.

First, he reflected on the volume of LLM research papers and the claims they have made. In particular, he highlighted research that has flagged issues such as systemic deficiencies and clinical disconnects, emphasizing evaluation as crucial to advancing AI.

"I am not a fan of testing LLMs with MCQs [multiple-choice questions]," Kim said, referring to reports of ChatGPT passing the radiology board exam. When an LLM does well on an exam of MCQs, "I don't know if it does that because it truly understands or because of pattern recognition or just rote memorization."

The bottom line? A diagnosis isn't a multiple-choice question.

Synthetic data also raises important questions, according to Kim.

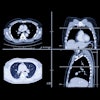

"This technology is so advanced that it can create all kinds of medical images that would fool most physicians, and it's a very exciting technology, but be cautious," Kim said. He featured a paper suggesting that AI models can be trained to identify a patient's race from X-rays.

"If you gave that same test to me and my colleagues, we can't do that," Kim said. "In fact, three years later we still can't figure out how these models are doing what they're doing."

Kim cautioned, "When you augment your dataset ... what hidden biases are you baking in that you can't see?"

In a third example, Kim stressed the importance of clinical domain experience in the development of AI models. Citing XrayGPT as an example, he called the tool "utter nonsense."

At RSNA 2025, Woojin Kim, MD, illustrated the "utter nonsense" of a GPT model. Liz Carey

Kim added that the latest hype is around "thinking" and "reasoning" models. Look for hallucinations, he warned.

Finally, accuracy of AI models is not the same as clinical efficiency, Kim said to close the talk.

"Just because a model is accurate does not mean it will be clinically efficient," Kim noted. "Think beyond the accuracy. As a radiologist, the thing that is most important is clinical utility. If the AI model is not clinically useful, I'm not going to use it."

Kim, a musculoskeletal radiologist at the Palo Alto VA Medical Center, serves as chief medical officer at the American College of Radiology Data Science Institute. He is also chief strategy officer and chief medical information officer at HOPPR.

The keynote opened a noninterpretive AI track that featured Hayley Briody from Beaumont Hospital in Dublin, Ireland, on patient perceptions of the use of AI in a tertiary referral radiology department.

Among the findings, 67% of respondents still preferred having a radiologist interpret their imaging, even if an AI tool performed with a higher degree of accuracy than the radiologist, Briody noted in her session.

Briody's group also found that 96% of those surveyed wanted the use of AI to be disclosed; 53% wanted to be asked for written consent; and 33% wanted to be verbally informed, although Briody said the applicability of the findings is limited.

"Patients are curious about how AI is being used in their imaging and they would like it to be disclosed," Briody said. Overall, most patients do have a positive attitude towards AI, but they favor it being used as a collaborative tool with the radiologist.

"Most patients are not ready for standalone AI, even if more efficient or more accurate," Briody concluded. However, during the Q&A, she pointed out the importance of exploring patient concerns.

"We're not sure now if the interpretation of that is they're worried about not having a human person to ask questions to," she said. "It might not actually be that they don't want the tool because it is more accurate; they may just be concerned about the implications of that overall, and how it would change their experience with radiology or with their provider."
